Data Wrangling

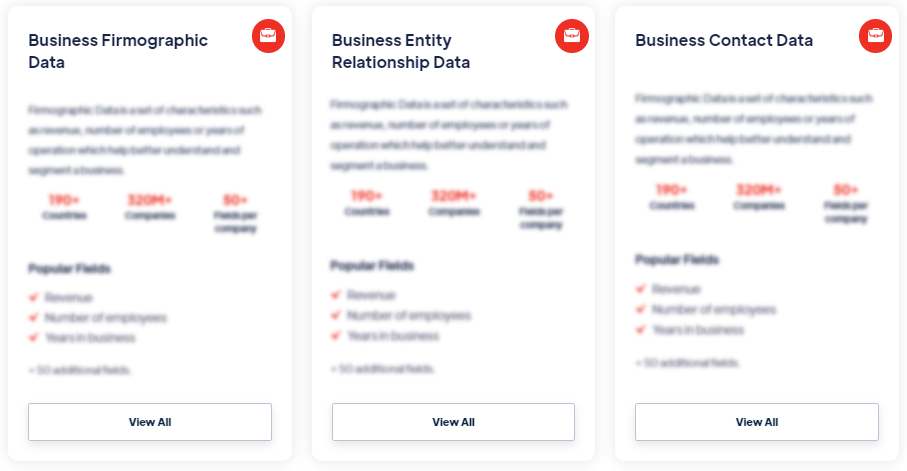

Frequently Asked Questions

1. What is Data Wrangling?

Data Wrangling, also called data munging and often used interchangeably with Data Preprocessing or Data Cleaning, refers to the process of transforming and preparing raw, unstructured, or inconsistent data into a structured and usable format for analysis. Strictly speaking, data cleaning is one part of wrangling: the process also involves data integration, data transformation, and data enrichment.

2. What are the key steps involved in Data Wrangling?

The key steps involved in Data Wrangling include data collection, data cleaning, data integration, data transformation, and data validation. Data collection involves gathering data from various sources. Data cleaning involves identifying and fixing errors, inconsistencies, and missing values in the data. Data integration combines data from multiple sources into a single dataset. Data transformation restructures, aggregates, or encodes data to make it suitable for analysis. Data validation checks the accuracy, completeness, and consistency of the wrangled data.
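The five steps above can be sketched end to end in plain Python. This is a minimal, hypothetical example rather than a reference implementation: the two CSV sources, their column names, and the functions `collect`, `clean`, `integrate`, `transform`, and `validate` are all invented for illustration, and only the standard library is used so the sketch runs without extra packages.

```python
import csv
import io

# Hypothetical raw sources (made-up data) with typical problems:
# stray whitespace, inconsistent capitalization, a duplicate row, a missing amount.
SOURCE_A = """id,name,amount
1, Alice ,10.5
2,Bob,
2,Bob,
3,carol,7
"""
SOURCE_B = """id,region
1,EU
2,US
3,EU
"""


def collect(text):
    """Collection: read one CSV source into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))


def clean(rows):
    """Cleaning: trim and title-case names, parse amounts (blank -> None), drop exact duplicates."""
    seen, cleaned = set(), []
    for row in rows:
        amount = row["amount"].strip()
        record = (int(row["id"]), row["name"].strip().title(), float(amount) if amount else None)
        if record not in seen:
            seen.add(record)
            cleaned.append({"id": record[0], "name": record[1], "amount": record[2]})
    return cleaned


def integrate(left, right):
    """Integration: inner-join the two sources on the shared id column."""
    region_by_id = {int(r["id"]): r["region"] for r in right}
    return [{**row, "region": region_by_id[row["id"]]} for row in left if row["id"] in region_by_id]


def transform(rows):
    """Transformation: total amount per region, skipping rows whose amount is missing."""
    totals = {}
    for row in rows:
        if row["amount"] is not None:
            totals[row["region"]] = totals.get(row["region"], 0.0) + row["amount"]
    return totals


def validate(rows, totals):
    """Validation: raise if ids are not unique or any total is negative."""
    ids = [row["id"] for row in rows]
    if len(ids) != len(set(ids)):
        raise ValueError("duplicate ids after cleaning")
    if any(value < 0 for value in totals.values()):
        raise ValueError("negative total")


rows = integrate(clean(collect(SOURCE_A)), collect(SOURCE_B))
totals = transform(rows)
validate(rows, totals)
print(totals)  # {'EU': 17.5}
```

The US region has no total because its only row has a missing amount; whether to skip, impute, or flag such rows is a decision the cleaning step has to make explicitly. In practice a library such as pandas would usually replace the hand-written loops; the point here is only the order of the steps and what each one is responsible for.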

3. What are the challenges in Data Wrangling?

Data Wrangling can be challenging because of data inconsistencies, missing values, duplicate records, differences in data formats, and growing data volumes. Messy and inconsistent data requires careful cleaning. Deciding whether to drop missing values or impute them, and how, can be complex. Integrating sources with different formats and structures can pose challenges; for example, one system may write a date as 2023-03-05 and another as 05/03/2023. Finally, wrangling processes that work on a small sample may need to be redesigned to scale as the dataset grows.
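Format differences are often the most mechanical of these challenges to address. A minimal sketch, assuming dates arrive as strings in one of a few known formats (the `DATE_FORMATS` tuple and `parse_date` helper are hypothetical), tries each format in turn and returns `None` for values it cannot parse, so they can be handled as missing values rather than silently guessed.

```python
from datetime import datetime

# Hypothetical set of formats seen across sources; order matters when strings are ambiguous.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%b %d, %Y")


def parse_date(value):
    """Return a datetime.date for the first format that matches, or None if none do."""
    text = value.strip()
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date()
        except ValueError:
            continue  # try the next candidate format
    return None  # unparseable: leave it for missing-value handling


raw = ["2023-03-05", "05/03/2023", "Mar 5, 2023", "not a date", ""]
parsed = [parse_date(v) for v in raw]
print(parsed)
```

Here `05/03/2023` is read as 5 March only because a day-first pattern is the one listed. A source that writes month-first dates would need its own format list, since strings like this one are ambiguous on their own.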

4. What are the common techniques used in Data Wrangling?

Common techniques used in Data Wrangling include data cleaning methods such as removing duplicates, correcting errors, and handling missing values. Data integration techniques involve merging, joining, or appending datasets. Data transformation techniques include filtering, sorting, aggregating, and reshaping data. Other techniques may involve data standardization, data normalization, and data enrichment through the use of external data sources.
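Several of these techniques fit in a few lines of standard-library Python. The sketch below is illustrative only: the sensor readings are made up, median imputation is just one of many ways to handle missing values, and it assumes "normalization" means min-max scaling to [0, 1] and "standardization" means z-scores, which are common but not universal meanings of those terms.

```python
import statistics

# Made-up readings: one exact duplicate row and one missing value (None).
readings = [
    {"sensor": "a", "value": 4.0},
    {"sensor": "b", "value": None},
    {"sensor": "a", "value": 4.0},
    {"sensor": "c", "value": 10.0},
    {"sensor": "d", "value": 6.0},
]


def drop_duplicates(rows):
    """Keep the first occurrence of each identical row, preserving order."""
    seen, unique = set(), []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


def impute_median(rows, field):
    """Fill missing values in `field` with the median of the observed values."""
    median = statistics.median([r[field] for r in rows if r[field] is not None])
    return [{**r, field: median if r[field] is None else r[field]} for r in rows]


def min_max_normalize(values):
    """Rescale linearly to [0, 1]; assumes the values are not all equal."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


def z_score_standardize(values):
    """Subtract the mean and divide by the population standard deviation."""
    mean, std = statistics.fmean(values), statistics.pstdev(values)
    return [(v - mean) / std for v in values]


rows = impute_median(drop_duplicates(readings), "value")
values = [r["value"] for r in rows]
print(values)  # [4.0, 6.0, 10.0, 6.0]
print(min_max_normalize(values))
print(z_score_standardize(values))
```

Median imputation is used here because it is less sensitive to outliers than the mean, but whether to impute at all depends on why the values are missing.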

5. What tools and technologies are commonly used in Data Wrangling?

Various tools and technologies are available for Data Wrangling, including programming languages like Python and R, whose libraries (for example, pandas in Python and dplyr and tidyr in R) provide data manipulation functions. Data wrangling tools like OpenRefine, Trifacta Wrangler, and KNIME offer visual interfaces and automation capabilities. Relational database management systems such as PostgreSQL, MySQL, and SQLite use SQL to provide querying and manipulation functionality. Data integration tools like Informatica and Talend assist in integrating data from multiple sources. Business intelligence tools like Tableau and Power BI often include data preparation features as well.
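Because SQL is one of the tools mentioned, here is a hedged sketch of cleaning, deduplicating, and aggregating entirely inside a query. It uses Python's built-in `sqlite3` module with an in-memory SQLite database as a stand-in for a full DBMS; the `orders` table and its columns are hypothetical, and SQL dialects differ, so the query may need small changes for other systems.

```python
import sqlite3

# In-memory SQLite database; table name, columns, and rows are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, city TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        ("alice", " Paris", 20.0),
        ("alice", " Paris", 20.0),  # exact duplicate
        ("Bob", "paris", None),     # missing amount
        ("carol", "Berlin ", 5.0),  # trailing whitespace
    ],
)

# Clean (lowercase, trim, treat missing amounts as 0), deduplicate, then aggregate per city.
query = """
WITH cleaned AS (
    SELECT DISTINCT
        LOWER(customer)       AS customer,
        LOWER(TRIM(city))     AS city,
        COALESCE(amount, 0.0) AS amount
    FROM orders
)
SELECT city, COUNT(*) AS n_orders, SUM(amount) AS total
FROM cleaned
GROUP BY city
ORDER BY city
"""
result = conn.execute(query).fetchall()
conn.close()
print(result)  # [('berlin', 1, 5.0), ('paris', 2, 20.0)]
```

Two choices here deserve scrutiny with real data: `COALESCE(amount, 0.0)` treats a missing amount as zero, and `SELECT DISTINCT` collapses rows that are identical after cleaning, which would also merge two genuinely separate orders that happen to share the same values.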

6. What are the benefits of effective Data Wrangling?

Effective Data Wrangling leads to improved data quality, more dependable analysis, increased productivity, and better decision-making. By addressing data inconsistencies and errors, it improves the accuracy and reliability of the data. Well-structured, clean data supports more accurate and meaningful analysis. Efficient wrangling processes save time and effort, allowing analysts to focus on analysis rather than cleaning. Ultimately, the insights derived from properly wrangled data support informed decision-making and better business outcomes.

7. What are the best practices for Data Wrangling?

Some best practices for Data Wrangling include understanding the data requirements, documenting the wrangling process, writing reusable data cleaning and transformation scripts, performing exploratory data analysis, validating and testing the wrangled data, and maintaining data lineage and version control. It is important to have a clear understanding of the data and the desired outcomes before starting the wrangling process. Documentation helps maintain transparency and reproducibility. Reusable scripts ensure consistency and efficiency. Exploratory data analysis helps in understanding the data and identifying potential issues. Validating and testing the wrangled data protects its quality and integrity. Lastly, maintaining data lineage and version control helps track changes and manage data updates.
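Validation and lineage can be made concrete with a small amount of code. The sketch below is one possible approach, not a standard: the `validate` rules (unique `id`, non-empty `email`, an `@` in each email), the `run_step` helper, and the lineage record format are all hypothetical. Each step records SHA-256 fingerprints of its input and output so that a later run can confirm it processed the same data.

```python
import hashlib
import json


def fingerprint(rows):
    """Stable SHA-256 of the rows, so a lineage entry identifies an exact data version."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def validate(rows, required=("id", "email")):
    """Return human-readable problems (hypothetical rules); an empty list means all checks passed."""
    problems = []
    ids = [r.get("id") for r in rows]
    if len(ids) != len(set(ids)):
        problems.append("duplicate id values")
    for i, r in enumerate(rows):
        for field in required:
            if r.get(field) in (None, ""):
                problems.append(f"row {i}: missing {field}")
        email = r.get("email") or ""
        if email and "@" not in email:
            problems.append(f"row {i}: malformed email {email!r}")
    return problems


def run_step(name, func, rows, lineage):
    """Apply one wrangling step and append an input/output record to the lineage log."""
    out = func(rows)
    lineage.append({
        "step": name,
        "input": fingerprint(rows),
        "output": fingerprint(out),
        "rows_in": len(rows),
        "rows_out": len(out),
    })
    return out


raw = [
    {"id": 1, "email": " ANA@example.com "},
    {"id": 2, "email": "bob.example.com"},
    {"id": 3, "email": ""},
]
lineage = []
normalized = run_step(
    "normalize_email",
    lambda rs: [{**r, "email": r["email"].strip().lower()} for r in rs],
    raw,
    lineage,
)
problems = validate(normalized)
print(problems)
print(json.dumps(lineage, indent=2))
```

Returning a list of problems instead of raising on the first failure makes it easier to report every issue in a batch; a pipeline could still refuse to continue whenever the list is non-empty.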