Best Decision Tree Products

A decision tree is a popular supervised machine learning algorithm used for classification and regression tasks. It is a flowchart-like structure where each internal node tests a feature or attribute, each branch represents a decision rule (one outcome of that test), and each leaf node represents the outcome or prediction.

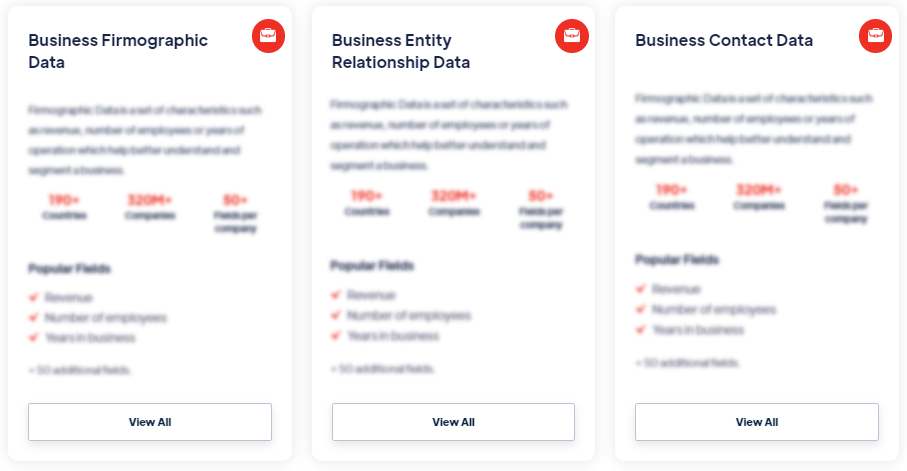

Frequently Asked Questions

1. What is a Decision Tree?

A decision tree is a popular supervised machine learning algorithm used for classification and regression tasks. It is a flowchart-like structure where each internal node tests a feature or attribute, each branch represents a decision rule (one outcome of that test), and each leaf node represents the outcome or prediction.
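
As a rough illustration of that structure, the sketch below hand-builds a tiny binary tree and walks it from root to leaf. The `Node` class, the `predict` helper, and the feature names and thresholds are all hypothetical, chosen only for this example; real libraries store trees differently.

```python
from dataclasses import dataclass
from typing import Optional, Union


@dataclass
class Node:
    """One node of a binary decision tree (hypothetical structure)."""
    feature: Optional[str] = None        # feature tested at an internal node
    threshold: Optional[float] = None    # decision rule: go left if value <= threshold
    left: Optional["Node"] = None        # branch taken when the rule holds
    right: Optional["Node"] = None       # branch taken otherwise
    prediction: Union[str, float, None] = None  # set only on leaf nodes

    def is_leaf(self) -> bool:
        return self.left is None and self.right is None


def predict(node: Node, sample: dict) -> Union[str, float, None]:
    """Walk from the root to a leaf, following one branch per test."""
    while not node.is_leaf():
        node = node.left if sample[node.feature] <= node.threshold else node.right
    return node.prediction


# Toy tree with made-up features and thresholds (assumptions, not real data).
tree = Node(
    feature="temperature", threshold=20.0,
    left=Node(prediction="stay in"),
    right=Node(
        feature="humidity", threshold=70.0,
        left=Node(prediction="go out"),
        right=Node(prediction="stay in"),
    ),
)

print(predict(tree, {"temperature": 25.0, "humidity": 50.0}))  # go out
```

The root is simply the first `Node`; every path from it to a leaf reads as a chain of if/else rules, which is where the interpretability of decision trees comes from.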

2. What are the key components of a Decision Tree?

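The key components of a decision tree are the root node, internal nodes, branches, and leaf nodes, together with the features (attributes) tested at the nodes, the decision rules attached to the branches, and the predictions (outcomes) stored at the leaves.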

3. What are the advantages of using Decision Trees?

Advantages of decision trees include interpretability, the ability to model nonlinear relationships, built-in feature importance measurement, relative robustness to outliers and (in implementations that support them) missing values, and versatility across classification and regression tasks.

4. What are the limitations of Decision Trees?

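Limitations of decision trees include a tendency to overfit, a lack of smoothness in decision boundaries (predictions are piecewise constant), instability under small changes in the data, and challenges in handling categorical variables, particularly in implementations that require them to be encoded numerically.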
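
To make the lack of smoothness concrete, here is a minimal sketch of a depth-1 regression tree (a "stump") fitted to perfectly linear data. The `fit_stump` and `stump_predict` helpers and the toy data are assumptions made for this example, not any library's API.

```python
def fit_stump(xs, ys):
    """Fit a depth-1 regression tree: one threshold, two constant predictions."""
    best = None
    for t in sorted(set(xs))[:-1]:  # skip the largest value: nothing would go right
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        # Sum of squared errors when each side predicts its own mean.
        sse = (sum((y - left_mean) ** 2 for y in left)
               + sum((y - right_mean) ** 2 for y in right))
        if best is None or sse < best[0]:
            best = (sse, t, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return threshold, left_mean, right_mean


def stump_predict(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


# Toy data on the line y = x (made up for illustration).
xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]

stump = fit_stump(xs, ys)
print(stump)                      # (2.0, 1.0, 4.0)
print(stump_predict(stump, 2.0))  # 1.0
print(stump_predict(stump, 2.1))  # 4.0
```

Although the underlying relationship is a straight line, the prediction jumps from 1.0 to 4.0 as `x` crosses the threshold. Deeper trees add more steps but the output stays piecewise constant.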

5. What are some applications of Decision Trees?

Decision trees are commonly used for classification tasks, regression tasks, feature selection, anomaly detection, and decision support systems.

6. What are the steps involved in building a Decision Tree?

The steps involved in building a decision tree include data preprocessing, tree construction, recursive partitioning, pruning (optional), and evaluation.
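
The construction and recursive partitioning steps can be sketched in a few lines of plain Python. This is a simplified, CART-style illustration under stated assumptions: the data is already numeric (so preprocessing is skipped), splits are chosen by weighted Gini impurity, and a `max_depth` limit stands in for pruning. The function names, the dictionary layout, and the toy data are all hypothetical.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(rows, labels):
    """Return the (feature_index, threshold) that lowers weighted Gini most, or None."""
    best, best_score = None, gini(labels)
    for f in range(len(rows[0])):
        for t in sorted({r[f] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if score < best_score:  # accept only strict improvements
                best, best_score = (f, t), score
    return best


def build(rows, labels, depth=0, max_depth=3):
    """Recursively partition the data; max_depth acts as a simple pre-pruning rule."""
    split = best_split(rows, labels) if depth < max_depth else None
    if split is None:
        return Counter(labels).most_common(1)[0][0]  # leaf: majority class
    f, t = split
    left = [(r, y) for r, y in zip(rows, labels) if r[f] <= t]
    right = [(r, y) for r, y in zip(rows, labels) if r[f] > t]
    return {
        "feature": f,
        "threshold": t,
        "left": build([r for r, _ in left], [y for _, y in left], depth + 1, max_depth),
        "right": build([r for r, _ in right], [y for _, y in right], depth + 1, max_depth),
    }


def predict(tree, row):
    """Follow the decision rules until a leaf (a bare label) is reached."""
    while isinstance(tree, dict):
        tree = tree["left"] if row[tree["feature"]] <= tree["threshold"] else tree["right"]
    return tree


# Toy training data (made up): two numeric features, two classes.
rows = [[2.0, 1.0], [3.0, 1.5], [1.0, 3.0], [6.0, 2.0], [7.0, 3.5], [8.0, 1.0]]
labels = ["a", "a", "a", "b", "b", "b"]

tree = build(rows, labels)
# Evaluation step: accuracy, measured here on the training data only.
accuracy = sum(predict(tree, r) == y for r, y in zip(rows, labels)) / len(rows)
print(tree)
print(accuracy)
```

In practice the evaluation step would use held-out data rather than the training set, since a tree that is grown without limits can fit its training data perfectly and still generalize poorly.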

7. What are some popular algorithms for Decision Tree construction?

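Popular algorithms for decision tree construction include ID3, C4.5, and CART, which differ mainly in their splitting criteria. ID3 selects splits by information gain, C4.5 by gain ratio, and CART by Gini impurity for classification or variance reduction for regression. ID3 and C4.5 target categorical (class) outcomes, while CART handles both categorical and continuous target variables.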
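
The splitting criteria themselves are short formulas. The sketch below computes entropy-based information gain (the ID3 criterion), gain ratio (the C4.5 criterion), and Gini impurity (the usual CART classification criterion) for one candidate split. The helper names and the toy labels are assumptions for illustration only.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def weighted(impurity, groups):
    """Size-weighted average impurity of the child groups of a split."""
    total = sum(len(g) for g in groups)
    return sum(len(g) / total * impurity(g) for g in groups if g)


def information_gain(parent, groups):
    """ID3 criterion: drop in entropy from parent to children."""
    return entropy(parent) - weighted(entropy, groups)


def gain_ratio(parent, groups):
    """C4.5 criterion: information gain normalized by the split's own entropy."""
    total = sum(len(g) for g in groups)
    split_info = -sum(len(g) / total * log2(len(g) / total) for g in groups if g)
    return information_gain(parent, groups) / split_info if split_info > 0 else 0.0


def gini_decrease(parent, groups):
    """CART classification criterion: drop in Gini impurity."""
    return gini(parent) - weighted(gini, groups)


# Toy split (made up): 8 labels divided into two groups of 4.
left = ["yes", "yes", "yes", "no"]
right = ["yes", "no", "no", "no"]
parent = left + right

print(round(information_gain(parent, [left, right]), 4))  # 0.1887
print(round(gain_ratio(parent, [left, right]), 4))        # 0.1887
print(round(gini_decrease(parent, [left, right]), 4))     # 0.125
```

Information gain and gain ratio coincide here only because the split is into two equal halves, which makes the split information exactly 1 bit. With uneven or many-way splits, gain ratio penalizes splits that fragment the data into many small groups.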