
Feature Engineering - A Complete Introduction


Feature Engineering refers to the process of selecting, transforming, and creating features from raw data to represent the underlying problem accurately. It involves domain knowledge, statistical techniques, and creativity to extract meaningful information and represent it in a format that machine learning models can understand.

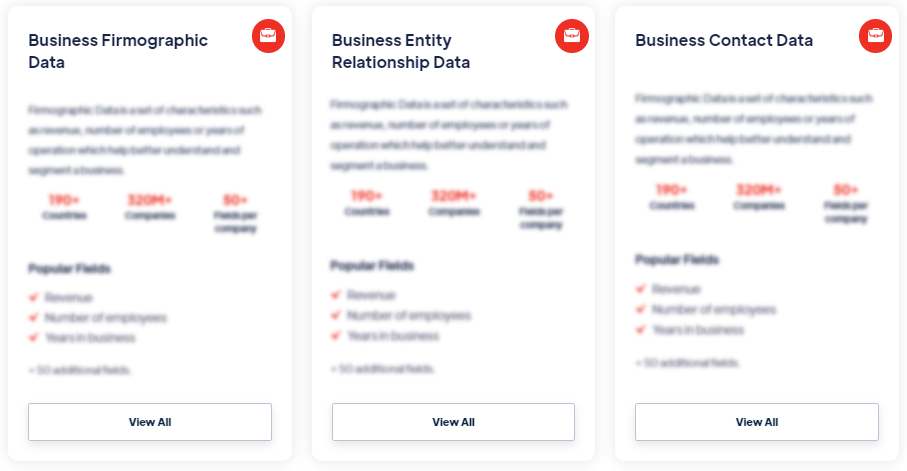


Frequently Asked Questions

1. What is Feature Engineering?

Feature Engineering refers to the process of selecting, transforming, and creating features from raw data to represent the underlying problem accurately. It involves domain knowledge, statistical techniques, and creativity to extract meaningful information and represent it in a format that machine learning models can understand.

2. Why is Feature Engineering important?

Feature Engineering is essential because the performance of machine learning models relies heavily on the quality and relevance of the features used. Well-engineered features can enhance the model's ability to capture complex relationships, reduce noise, and improve generalization. Feature Engineering can also help address issues such as missing data, outliers, and irrelevant variables.

3. What are the techniques used in Feature Engineering?

Feature Engineering techniques fall into four broad groups: feature extraction, feature transformation, feature selection, and feature creation. Extraction derives statistical measures from the data, transformation reshapes data distributions, selection keeps only the relevant features, and creation generates new features from existing ones.
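A minimal sketch of the four technique groups, assuming pandas and NumPy are available. The `orders` table and every column name in it are hypothetical, chosen only for illustration.

```python
import numpy as np
import pandas as pd

# Hypothetical toy data: one row per order (all names are illustrative).
orders = pd.DataFrame({
    "customer_id": [1, 1, 2, 2, 2],
    "amount": [20.0, 35.0, 5.0, 500.0, 12.0],
    "items": [2, 5, 1, 10, 3],
    "currency": ["EUR"] * 5,  # never varies, so it carries no signal
})

# Feature creation: derive a new column from existing ones.
orders["amount_per_item"] = orders["amount"] / orders["items"]

# Feature transformation: log1p compresses the long right tail of `amount`.
orders["log_amount"] = np.log1p(orders["amount"])

# Feature extraction: summarize each customer's orders with statistics.
customer_features = orders.groupby("customer_id").agg(
    order_count=("amount", "count"),
    mean_amount=("amount", "mean"),
    max_amount=("amount", "max"),
)

# Feature selection (simplest form): drop columns with one distinct value.
selected = orders.loc[:, orders.nunique() > 1]
```

Dropping constant columns is only the most basic selection rule. In practice, selection is usually driven by a relevance score against the target.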

4. How is Feature Engineering performed?

Performing Feature Engineering requires a good understanding of the problem domain and the data at hand. It typically involves the following steps: exploratory data analysis to understand the data, data cleaning to handle missing values and outliers, feature transformation and scaling to make features suitable for models, feature creation based on domain knowledge, and feature selection to identify the most relevant features.
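The cleaning, transformation, and scaling steps can be sketched as a scikit-learn preprocessing pipeline. This is one possible arrangement, not a prescribed workflow. The DataFrame and its column names are hypothetical, and the choice of median imputation and a log transform is an assumption made for this example.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import FunctionTransformer, OneHotEncoder, StandardScaler

# Hypothetical raw data with missing values and one extreme income.
df = pd.DataFrame({
    "age": [25.0, np.nan, 40.0, 33.0],
    "income": [30_000.0, 52_000.0, np.nan, 1_000_000.0],
    "city": ["Paris", "Berlin", "Berlin", "Paris"],
})

# Exploratory check: how many values are missing in each column?
missing_per_column = df.isna().sum()

# Numeric columns: fill gaps with the median, dampen outliers with log1p
# (applied to both numeric columns here for brevity), then standardize.
numeric_steps = Pipeline([
    ("impute", SimpleImputer(strategy="median")),
    ("log", FunctionTransformer(np.log1p)),
    ("scale", StandardScaler()),
])

# Categorical column: one-hot encode; unseen categories become all zeros.
preprocess = ColumnTransformer(
    [
        ("num", numeric_steps, ["age", "income"]),
        ("cat", OneHotEncoder(handle_unknown="ignore"), ["city"]),
    ],
    sparse_threshold=0,  # always return a dense array
)

X = preprocess.fit_transform(df)
```

Bundling the steps in one object means the same fitted transformations can later be applied to new data with `preprocess.transform(...)`.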

5. What are the considerations in Feature Engineering?

Considerations in Feature Engineering include domain knowledge, data quality, overfitting, model interpretability, and computational efficiency. Domain knowledge helps identify relevant features, while good data quality keeps the engineered features accurate. To avoid overfitting, focus on features that carry meaningful information rather than noise. Where the model must be interpretable, prefer features whose meaning is easy to explain. Computational efficiency should also be considered, since costly features consume resources.
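One common guard against overfitting is to place scaling and feature selection inside a cross-validated pipeline, so that each fold chooses its features from its own training portion only. The sketch below assumes scikit-learn and uses synthetic data. The choice of `SelectKBest` with `k=5` and a logistic regression classifier is illustrative, not a recommendation.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic data: 30 features, of which only 5 are informative.
X, y = make_classification(
    n_samples=300, n_features=30, n_informative=5, random_state=0
)

# Scaling and selection are refit on the training part of every fold,
# so the held-out part never influences which features are kept.
model = Pipeline([
    ("scale", StandardScaler()),
    ("select", SelectKBest(score_func=f_classif, k=5)),
    ("clf", LogisticRegression(max_iter=1000)),
])

scores = cross_val_score(model, X, y, cv=5)  # one accuracy value per fold
```

Selecting features on the full dataset before cross-validating would leak information from the held-out folds and make the scores look better than they are.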

6. How does Feature Engineering impact model performance?

Well-performed Feature Engineering can significantly impact model performance. It can lead to better predictive accuracy, improved model robustness, faster convergence during training, and reduced risk of overfitting. By capturing relevant information and reducing noise, feature engineering enhances the model's ability to extract meaningful patterns and make accurate predictions.
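A small synthetic illustration of this effect, assuming NumPy and scikit-learn. The target is constructed as the product of two inputs, so a linear model can only fit it once that product is supplied as an engineered feature. The data, the helper `held_out_r2`, and the variable names are all hypothetical.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)

# Two raw inputs; the target is their product plus a little noise.
X_raw = rng.normal(size=(500, 2))
y = X_raw[:, 0] * X_raw[:, 1] + rng.normal(scale=0.1, size=500)

# Engineered feature: the interaction term x0 * x1 as a third column.
X_eng = np.column_stack([X_raw, X_raw[:, 0] * X_raw[:, 1]])


def held_out_r2(X, y):
    """Fit a linear model on 75% of the rows and return R^2 on the rest."""
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.25, random_state=0
    )
    return LinearRegression().fit(X_train, y_train).score(X_test, y_test)


r2_raw = held_out_r2(X_raw, y)         # linear model on raw inputs only
r2_engineered = held_out_r2(X_eng, y)  # same model with the interaction term
```

Comparing `r2_raw` with `r2_engineered` shows how much of the held-out variance the same model explains before and after the feature is added. Real datasets rarely show such a clean gap.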

7. What are some popular tools and libraries for Feature Engineering?

Python libraries such as pandas, NumPy, scikit-learn, and TensorFlow are popular for data manipulation and feature engineering tasks. Additionally, automated feature engineering libraries such as Featuretools and AutoFeat can automate parts of the feature engineering process.