Best Feature Selection Products


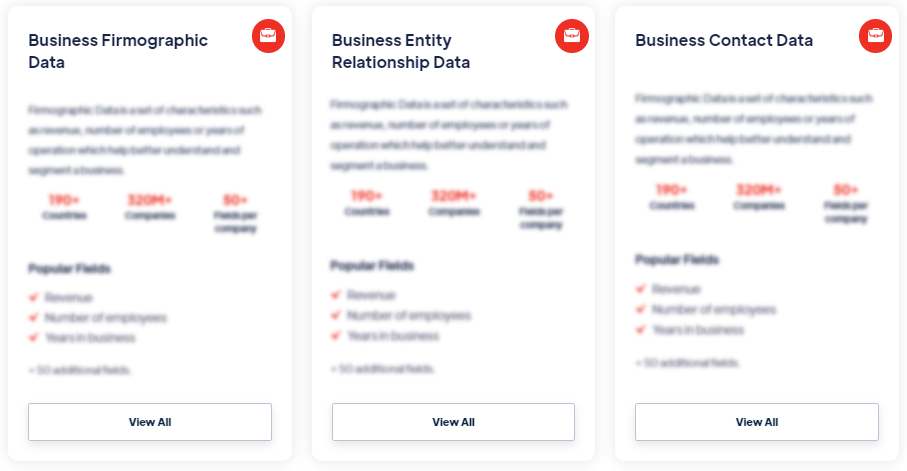

Our Data Integrations

Request Data Sample for

Feature Selection

Browse the Data Marketplace

Frequently Asked Questions

1. What is Feature Selection?

Feature selection is the process of selecting a subset of features from a larger set of available features. The goal is to identify the most relevant features that have the most significant impact on the target variable or the model's predictive performance. By selecting a subset of informative features, feature selection can simplify the model and improve its efficiency and effectiveness.

2. Why is Feature Selection important?

Feature selection offers several benefits in machine learning. It helps to reduce the dimensionality of the dataset, which can lead to faster training and prediction times. Additionally, feature selection can mitigate the risk of overfitting by focusing on the most informative features and reducing the influence of noisy or irrelevant features. Moreover, feature selection enhances model interpretability by identifying the most important variables that drive the predictions.

3. What are the approaches to Feature Selection?

There are three main approaches to feature selection: filter methods, wrapper methods, and embedded methods. Filter methods evaluate the relevance of features with statistical measures or information-theoretic criteria, independently of any particular model; they are fast but typically ignore interactions between features. Wrapper methods use a specific machine learning algorithm to assess the quality of feature subsets by training and evaluating the model on each candidate subset; this accounts for interactions but is computationally expensive. Embedded methods incorporate feature selection within the model training process itself, as L1-regularized (Lasso) models and decision trees do.
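A wrapper method can be sketched in plain Python as greedy forward selection: start with no features, repeatedly add the one that most improves the model's score, and stop when nothing helps. The scoring model here (a leave-one-out 1-nearest-neighbour classifier), the toy data, and the function names `loo_1nn_accuracy` and `forward_selection` are illustrative assumptions, not a standard API; scikit-learn ships a general version as `SequentialFeatureSelector`.

```python
from typing import Callable, List, Sequence, Tuple


def loo_1nn_accuracy(X: Sequence[Sequence[float]], y: Sequence[int],
                     features: Sequence[int]) -> float:
    """Leave-one-out accuracy of a 1-nearest-neighbour classifier that
    measures distance using only the given feature columns."""
    correct = 0
    for i in range(len(X)):
        # Closest *other* row by squared Euclidean distance on `features`.
        nearest = min(
            (j for j in range(len(X)) if j != i),
            key=lambda j: sum((X[i][f] - X[j][f]) ** 2 for f in features),
        )
        if y[nearest] == y[i]:
            correct += 1
    return correct / len(X)


def forward_selection(n_features: int,
                      score: Callable[[List[int]], float]) -> Tuple[List[int], float]:
    """Greedy wrapper search: add the feature that most improves `score`,
    stopping as soon as no remaining candidate improves it."""
    selected: List[int] = []
    best = float("-inf")
    while len(selected) < n_features:
        candidates = [f for f in range(n_features) if f not in selected]
        # Retrain/evaluate the model once per candidate subset.
        cand_score, cand = max((score(selected + [f]), f) for f in candidates)
        if cand_score <= best:
            break
        selected.append(cand)
        best = cand_score
    return selected, best


# Toy data: feature 0 separates the classes, features 1 and 2 are noise.
X = [[0.1, 5.0, 3.0], [0.2, 1.0, 7.0], [0.3, 9.0, 1.0],
     [0.9, 4.0, 6.0], [1.0, 8.0, 2.0], [0.8, 2.0, 8.0]]
y = [0, 0, 0, 1, 1, 1]

selected, accuracy = forward_selection(3, lambda feats: loo_1nn_accuracy(X, y, feats))
print(selected, accuracy)  # [0] 1.0
```

Because each round evaluates the model once per remaining candidate, forward selection needs on the order of p² model fits for p features, which is why wrapper methods are usually the most expensive of the three approaches.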

4. What are the criteria for evaluating feature importance?

Various criteria can be used to evaluate feature importance, such as statistical measures like correlation (which captures only linear relationships) or mutual information (which also captures nonlinear dependencies), feature importance scores from machine learning algorithms like decision trees or random forests, or regularization techniques like L1 (Lasso) regularization, which can shrink the coefficients of less useful features to exactly zero. The choice of criteria depends on the nature of the data and the specific requirements of the problem.
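The difference between correlation and mutual information as filter criteria is easy to see on a feature that determines the target nonlinearly. The sketch below assumes discrete values and uses a simple plug-in (counting) estimate of mutual information in bits; continuous features would need binning or a dedicated estimator. The function names are illustrative.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation: measures only *linear* association."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def mutual_information(x: Sequence[Hashable], y: Sequence[Hashable]) -> float:
    """Plug-in estimate of I(X; Y) in bits for discrete values. It is zero
    only when the empirical joint distribution equals the product of the
    marginals, i.e. when there is no dependence of any kind."""
    n = len(x)
    px, py, pxy = Counter(x), Counter(y), Counter(zip(x, y))
    return sum(
        (c / n) * math.log2((c / n) / ((px[a] / n) * (py[b] / n)))
        for (a, b), c in pxy.items()
    )


x = [-2, -1, 0, 1, 2]
y = [v * v for v in x]           # y is fully determined by x, but not linearly
print(pearson(x, y))             # 0.0: correlation sees no relationship
print(mutual_information(x, y))  # about 1.52 bits: MI detects the dependence
```

A correlation-based filter would discard this feature, while a mutual-information filter would rank it highly.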

5. What are the common techniques for Feature Selection?

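Common techniques for feature selection include univariate selection, recursive feature elimination (RFE), and feature importance from tree-based models; principal component analysis (PCA) is often listed alongside them, although strictly speaking it is a feature extraction method. Univariate selection evaluates each feature independently based on statistical tests or scoring methods. Recursive feature elimination repeatedly fits a model, ranks the features by the model's coefficients or importance scores, and removes the least important ones until the desired number remains. PCA transforms the original features into a smaller set of uncorrelated components, each a linear combination of the originals, so it reduces dimensionality without keeping a subset of the original features. Tree-based models provide feature importance scores based on the contribution of each feature to the model's predictions.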
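The recursive feature elimination loop can be sketched without any libraries. For brevity this version assumes the model is ordinary least squares on standardized features (so coefficient magnitudes are comparable), removes one feature per round, and uses hypothetical helper names (`solve`, `ols_coefficients`, `rfe`). scikit-learn's `RFE` class implements the general technique for any estimator that exposes coefficients or feature importances.

```python
from typing import List, Sequence, Tuple


def solve(A: List[List[float]], b: List[float]) -> List[float]:
    """Solve the square system A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]  # augmented matrix [A | b]
    for col in range(n):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def standardize(columns: Sequence[Sequence[float]]) -> List[List[float]]:
    """Rescale each column to mean 0 and unit variance (assumes no constant column)."""
    out = []
    for col in columns:
        m = sum(col) / len(col)
        sd = (sum((v - m) ** 2 for v in col) / len(col)) ** 0.5
        out.append([(v - m) / sd for v in col])
    return out


def ols_coefficients(cols: List[List[float]], y: Sequence[float]) -> List[float]:
    """Least-squares slopes of centred y on centred columns via the normal equations."""
    ym = sum(y) / len(y)
    yc = [v - ym for v in y]
    xtx = [[sum(a * b for a, b in zip(ci, cj)) for cj in cols] for ci in cols]
    xty = [sum(a * b for a, b in zip(ci, yc)) for ci in cols]
    return solve(xtx, xty)


def rfe(columns: Sequence[Sequence[float]], y: Sequence[float],
        n_keep: int) -> Tuple[List[int], List[int]]:
    """Refit, drop the feature with the smallest |coefficient|, repeat.
    Returns (kept feature indices, eliminated indices in removal order)."""
    cols = standardize(columns)
    remaining = list(range(len(columns)))
    eliminated: List[int] = []
    while len(remaining) > n_keep:
        coefs = ols_coefficients([cols[f] for f in remaining], y)
        weakest = min(range(len(remaining)), key=lambda i: abs(coefs[i]))
        eliminated.append(remaining.pop(weakest))
    return remaining, eliminated


# Toy data: y depends strongly on x0, weakly on x2, and not at all on x1.
x0 = [1, 2, 3, 4, 5, 6]
x1 = [2, 1, 4, 3, 6, 5]
x2 = [3, 1, 2, 6, 4, 5]
y = [3 * a + 0.5 * c for a, c in zip(x0, x2)]

kept, dropped = rfe([x0, x1, x2], y, n_keep=1)
print(kept, dropped)  # [0] [1, 2]
```

Refitting after every removal matters: once a feature is dropped, the coefficients of correlated features that remain can change, so their ranking is re-evaluated each round.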

6. How do you select the optimal number of features?

Selecting the optimal number of features involves a trade-off between model complexity and performance. It can be determined with cross-validation, often combined with a grid search over the number of features: different feature subsets are evaluated, and the model's performance on held-out data is compared. The optimal number of features is typically chosen as the point beyond which adding more features no longer improves model performance significantly.
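The stopping rule in this answer, picking the smallest feature count whose score is close enough to the best, can be written as a small helper. The scores below are made-up placeholders standing in for cross-validated results, and the name `smallest_sufficient_k` and the absolute `tolerance` are assumptions of this sketch; one common choice for the tolerance is one standard error of the cross-validation estimate.

```python
from typing import Dict


def smallest_sufficient_k(cv_scores: Dict[int, float], tolerance: float) -> int:
    """Return the fewest features whose cross-validated score is within
    `tolerance` of the best score observed across all subset sizes."""
    best = max(cv_scores.values())
    return min(k for k, s in cv_scores.items() if s >= best - tolerance)


# Hypothetical mean CV accuracies for the best subset of each size k.
scores = {1: 0.71, 2: 0.80, 3: 0.845, 4: 0.85, 5: 0.849, 6: 0.846}
print(smallest_sufficient_k(scores, tolerance=0.01))  # 3
```

Here the peak is at four features, but three features come within the tolerance, so the simpler model is preferred. In scikit-learn, `RFECV` produces the per-size cross-validation scores automatically.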

7. What are the considerations for Feature Selection?

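When performing feature selection, it's important to consider the specific problem at hand, the characteristics of the data, and the goals of the modeling task. It's crucial to strike a balance between the number of features selected and the predictive performance of the model. Additionally, careful evaluation and validation of the selected features are essential to ensure that they generalize well to unseen data. In particular, the selection step should be fit on training data only (for example, repeated within each cross-validation fold), because choosing features on the full dataset leaks information from the evaluation data and produces optimistic performance estimates.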
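One way to validate selected features on genuinely unseen data is to re-run the selection step inside every cross-validation fold, using only that fold's training rows. The sketch assumes binary 0/1 labels and uses two deliberately simple, hypothetical components: a filter that ranks features by the gap between class means, and a nearest-centroid classifier. In scikit-learn the same effect is usually achieved by placing the selector and the model in a `Pipeline` and passing that pipeline to `cross_val_score`.

```python
from typing import Callable, Iterator, List, Tuple

Rows = List[List[float]]


def k_fold_splits(n: int, k: int) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, test_indices) for k contiguous, unshuffled folds."""
    start = 0
    for fold in range(k):
        size = n // k + (1 if fold < n % k else 0)
        test = list(range(start, start + size))
        train = [i for i in range(n) if not (start <= i < start + size)]
        yield train, test
        start += size


def mean_gap_select(X: Rows, y: List[int], n_keep: int = 1) -> List[int]:
    """Filter: rank features by |mean(class 1) - mean(class 0)|."""
    def gap(f: int) -> float:
        a = [row[f] for row, t in zip(X, y) if t == 0]
        b = [row[f] for row, t in zip(X, y) if t == 1]
        return abs(sum(b) / len(b) - sum(a) / len(a))
    return sorted(range(len(X[0])), key=gap, reverse=True)[:n_keep]


def nearest_centroid_accuracy(Xtr: Rows, ytr: List[int], Xte: Rows, yte: List[int]) -> float:
    """Fit one centroid per class on the training rows; report test accuracy."""
    centroids = {}
    for label in set(ytr):
        rows = [r for r, t in zip(Xtr, ytr) if t == label]
        centroids[label] = [sum(col) / len(rows) for col in zip(*rows)]

    def predict(row: List[float]) -> int:
        return min(centroids,
                   key=lambda c: sum((a - b) ** 2 for a, b in zip(row, centroids[c])))

    return sum(predict(r) == t for r, t in zip(Xte, yte)) / len(yte)


def cv_select_then_fit(X: Rows, y: List[int], k: int,
                       select: Callable[[Rows, List[int]], List[int]]) -> float:
    """Mean CV accuracy with feature selection re-run inside every fold."""
    scores = []
    for train, test in k_fold_splits(len(X), k):
        Xtr, ytr = [X[i] for i in train], [y[i] for i in train]
        Xte, yte = [X[i] for i in test], [y[i] for i in test]
        feats = select(Xtr, ytr)  # sees the training rows only
        Xtr_sel = [[r[f] for f in feats] for r in Xtr]
        Xte_sel = [[r[f] for f in feats] for r in Xte]
        scores.append(nearest_centroid_accuracy(Xtr_sel, ytr, Xte_sel, yte))
    return sum(scores) / len(scores)


# Toy data: feature 0 separates the classes, feature 1 is noise.
X = [[0.0, 0.5], [10.0, 0.2], [1.0, 0.9], [9.0, 0.4],
     [0.5, 0.1], [11.0, 0.8], [1.5, 0.3], [9.5, 0.6]]
y = [0, 1, 0, 1, 0, 1, 0, 1]
print(cv_select_then_fit(X, y, k=4, select=mean_gap_select))  # 1.0
```

Selecting features once on the whole dataset and only then cross-validating would let the test folds influence which features are chosen, which tends to inflate the reported score.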