Generalization

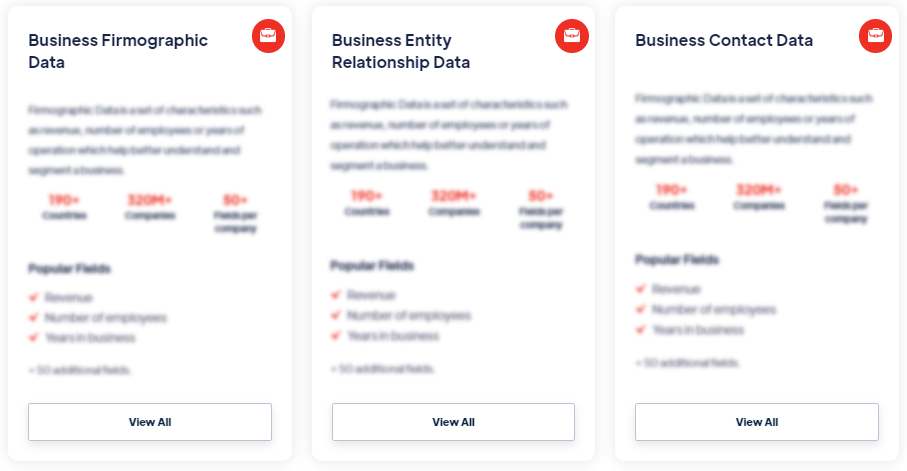

Frequently Asked Questions

1. What is generalization?

Generalization refers to the ability of a machine learning model to accurately predict or classify new data that it did not encounter during training. It involves learning patterns and relationships from a limited set of training examples and applying that knowledge to make predictions on new, unseen data.
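One common way to make this definition precise is sketched below. The notation is ours, not the original's, and it assumes a model f, a loss function ℓ, n training examples (x_i, y_i), and a data distribution 𝒟 from which both the training data and future data are drawn:

```latex
\hat{R}_{\text{train}}(f) = \frac{1}{n} \sum_{i=1}^{n} \ell\big(f(x_i),\, y_i\big),
\qquad
R(f) = \mathbb{E}_{(x, y) \sim \mathcal{D}}\big[\ell\big(f(x),\, y\big)\big],
\qquad
\text{gap}(f) = R(f) - \hat{R}_{\text{train}}(f).
```

R(f) cannot be computed directly, so in practice it is estimated by the average loss on held-out test data. Good generalization means R(f) itself is low; a large gap between test and training loss is the typical signature of overfitting.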

2. How is generalization achieved?

Generalization is achieved by developing models that capture the underlying patterns and relationships in the training data without overfitting. Overfitting occurs when a model learns the specific details and noise in the training data too closely, so that it performs well on the training set but poorly on new data. Techniques such as regularization, appropriate model selection, and cross-validation (used to compare candidate models and settings on data they were not trained on) help achieve better generalization.
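The contrast between capturing the underlying pattern and memorizing noise can be sketched with a small, self-contained experiment. It assumes synthetic data from a hypothetical relationship y = 2x + 1 plus Gaussian noise, and compares two illustrative models: a "memorizer" that returns the label of the nearest training point (1-nearest-neighbor) and an ordinary least-squares line. All function names are ours and purely illustrative.

```python
import random


def make_data(n, rng):
    # Hypothetical ground truth for illustration: y = 2x + 1 plus Gaussian noise (sigma = 1).
    data = []
    for _ in range(n):
        x = rng.uniform(0.0, 10.0)
        data.append((x, 2.0 * x + 1.0 + rng.gauss(0.0, 1.0)))
    return data


def fit_memorizer(train):
    # Overfits by design: predicts the label of the nearest training point (1-NN),
    # so it reproduces every training label, noise included.
    def predict(x):
        return min(train, key=lambda p: abs(p[0] - x))[1]
    return predict


def fit_line(train):
    # Ordinary least squares for y = a*x + b: captures only the linear trend.
    n = len(train)
    mean_x = sum(x for x, _ in train) / n
    mean_y = sum(y for _, y in train) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in train)
    sxx = sum((x - mean_x) ** 2 for x, _ in train)
    a = sxy / sxx
    b = mean_y - a * mean_x

    def predict(x):
        return a * x + b
    return predict


def mse(model, data):
    # Mean squared error of a model over a list of (x, y) pairs.
    return sum((model(x) - y) ** 2 for x, y in data) / len(data)


rng = random.Random(0)
train = make_data(100, rng)
test = make_data(500, rng)  # unseen data drawn from the same distribution

results = {}
for name, fit in (("memorizer", fit_memorizer), ("line", fit_line)):
    model = fit(train)
    results[name] = (mse(model, train), mse(model, test))
    print(f"{name:<9} train MSE={results[name][0]:.3f}  test MSE={results[name][1]:.3f}")
```

The memorizer reproduces every training label exactly, so its training error is zero, yet its test error is noticeably higher than the line's. The line has nonzero training error but generalizes better because it matches the underlying trend rather than the noise.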

3. What are the benefits of good generalization?

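Good generalization ensures that a machine learning model performs well on new, unseen data, which is crucial for its practical application. It allows the model to make accurate predictions, apply insights from the training data to new situations, and handle noisy or imperfect data. Note that generalization is usually measured on data drawn from the same distribution as the training data; if that distribution changes substantially over time, even a model that generalizes well typically needs to be monitored and retrained.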

4. How is generalization evaluated?

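Generalization is evaluated by measuring a model's performance on a separate test dataset that is held out from training (and, ideally, from model selection and hyperparameter tuning as well). For classification tasks, common evaluation metrics include accuracy, precision, recall, F1 score, and the area under the receiver operating characteristic curve (AUC-ROC). Cross-validation techniques, such as k-fold cross-validation, can also be used to estimate generalization performance: the data are split into k folds, and the model is trained k times, each time on k − 1 folds and evaluated on the remaining fold, with the k scores averaged.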
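A minimal sketch of how these classification metrics can be computed by hand for binary labels. The labels, scores, and 0.5 decision threshold are hypothetical. AUC-ROC is computed here through its probabilistic interpretation (the chance that a randomly chosen positive receives a higher score than a randomly chosen negative), which is easy to read but quadratic in the number of examples; libraries such as scikit-learn provide equivalent, faster functions.

```python
# Binary labels: 1 = positive class, 0 = negative class.

def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn) for binary 0/1 labels."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return tp, fp, fn, tn


def classification_metrics(y_true, y_pred):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / len(y_true)
    # Guard against division by zero when a class is never predicted or never present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def auc_roc(y_true, scores):
    """AUC-ROC as the probability that a random positive outranks a random negative.

    Ties count as half a win. This O(P*N) pairwise form is for illustration only.
    """
    pos = [s for s, t in zip(scores, y_true) if t == 1]
    neg = [s for s, t in zip(scores, y_true) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical held-out test set: true labels and model scores.
y_test = [1, 1, 1, 0, 0, 0, 1, 0]
scores = [0.9, 0.8, 0.4, 0.35, 0.1, 0.6, 0.7, 0.2]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # 0.5 threshold is an assumption

print(classification_metrics(y_test, y_pred))
# {'accuracy': 0.75, 'precision': 0.75, 'recall': 0.75, 'f1': 0.75}
print("AUC-ROC:", auc_roc(y_test, scores))
# AUC-ROC: 0.9375
```

The threshold-based metrics depend on the chosen cut-off, whereas AUC-ROC uses only the ranking of the scores, which is why the two are often reported together.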

5. What are the challenges in achieving good generalization?

Achieving good generalization can be challenging for several reasons. Insufficient or biased training data can limit the model's ability to generalize to diverse scenarios. Overfitting, underfitting, and choosing an inappropriate model complexity can also hinder generalization. High-dimensional data, imbalanced classes, and noisy or missing data are additional challenges that can affect generalization performance.
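The imbalanced-class problem can be illustrated with a hypothetical test set of 5 positives and 95 negatives, scored against a degenerate model that always predicts the majority class. Accuracy looks high even though the model never finds a positive example; recall and balanced accuracy (the mean of the per-class recalls) expose the problem.

```python
# Hypothetical imbalanced test set: 5 positives, 95 negatives.
y_true = [1] * 5 + [0] * 95
# A degenerate "model" that always predicts the majority class.
y_pred = [0] * len(y_true)

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
recall_pos = tp / y_true.count(1)  # share of positives the model found
recall_neg = tn / y_true.count(0)  # share of negatives the model found
balanced_accuracy = (recall_pos + recall_neg) / 2

print(f"accuracy={accuracy:.2f} recall={recall_pos:.2f} balanced accuracy={balanced_accuracy:.2f}")
# accuracy=0.95 recall=0.00 balanced accuracy=0.50
```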

6. How can generalization be improved?

To improve generalization, techniques such as regularization, feature selection or dimensionality reduction, data augmentation, and ensembling can be employed. Regularization methods, like L1 or L2 regularization, help prevent overfitting by adding a penalty on the size of the model's parameters to its loss function. Feature selection and dimensionality reduction reduce the complexity of the model by focusing it on the most informative features. Data augmentation involves creating new training examples by applying transformations or perturbations to the existing data. Ensembling combines the predictions of several models (for example by averaging or voting), which often yields more accurate and stable predictions than any single member.
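For L2 regularization, the effect of the penalty can be sketched in the simplest case of a single feature with no intercept (an assumption made to keep the algebra short). The penalized loss is the sum of squared errors plus λw², and setting its derivative to zero gives the closed form w = Σxy / (Σx² + λ). The data points are hypothetical.

```python
def penalized_loss(w, xs, ys, lam):
    """Squared-error loss plus an L2 penalty lam * w**2 (an L1 penalty would use lam * abs(w))."""
    data_loss = sum((y - w * x) ** 2 for x, y in zip(xs, ys))
    return data_loss + lam * w ** 2


def ridge_weight(xs, ys, lam):
    """Closed-form minimizer of penalized_loss for one feature with no intercept.

    Setting the derivative -2*sum(x*(y - w*x)) + 2*lam*w to zero gives
    w = sum(x*y) / (sum(x*x) + lam).
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


# Hypothetical training data.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [1.2, 1.9, 3.2, 3.9]

for lam in (0.0, 1.0, 10.0):
    w = ridge_weight(xs, ys, lam)
    print(f"lambda={lam:5.1f}  w={w:.3f}  loss={penalized_loss(w, xs, ys, lam):.3f}")
```

Increasing λ shrinks the learned weight toward zero, trading a slightly worse fit on the training data for a simpler model; in practice λ is itself a hyperparameter chosen on validation data.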

7. What is the role of hyperparameter tuning in generalization?

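Hyperparameter tuning plays a crucial role in achieving good generalization. Hyperparameters are settings or configurations of a machine learning model that are not learned from the data but set by the user, such as the regularization strength or the number of neighbors in a k-nearest-neighbors model. Optimizing hyperparameters through techniques like grid search or Bayesian optimization helps find the settings that lead to better generalization. Candidate settings should be compared on validation data or with cross-validation rather than on the final test set; otherwise the test score overestimates performance on truly unseen data. Proper tuning of hyperparameters can prevent underfitting or overfitting, leading to better performance on unseen data.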
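A minimal grid-search sketch, assuming a k-nearest-neighbors regressor whose hyperparameter is the number of neighbors k, synthetic data from a hypothetical relationship y = 2x + 1 plus noise, and 5-fold cross-validation as the selection criterion. All function names are illustrative; scikit-learn's GridSearchCV automates the same loop for its estimators.

```python
import random


def make_data(n, seed):
    # Hypothetical data: y = 2x + 1 plus Gaussian noise (sigma = 1). x is drawn uniformly,
    # so the list is already in random order and contiguous folds are not biased.
    rng = random.Random(seed)
    out = []
    for _ in range(n):
        x = rng.uniform(0.0, 10.0)
        out.append((x, 2.0 * x + 1.0 + rng.gauss(0.0, 1.0)))
    return out


def knn_predict(train, x, k):
    # k-nearest-neighbors regression: average the labels of the k closest training points.
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / len(nearest)


def k_fold_splits(n, n_folds):
    # Yield (train_indices, validation_indices); fold sizes differ by at most one.
    start = 0
    for i in range(n_folds):
        size = n // n_folds + (1 if i < n % n_folds else 0)
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, val
        start += size


def cv_mse(data, k, n_folds=5):
    # Average validation MSE of k-NN across folds; no test data is used here.
    fold_errors = []
    for train_idx, val_idx in k_fold_splits(len(data), n_folds):
        train = [data[i] for i in train_idx]
        val = [data[i] for i in val_idx]
        err = sum((knn_predict(train, x, k) - y) ** 2 for x, y in val) / len(val)
        fold_errors.append(err)
    return sum(fold_errors) / len(fold_errors)


def grid_search(data, grid):
    # Score every candidate value and keep the one with the lowest cross-validated error.
    scores = {k: cv_mse(data, k) for k in grid}
    best = min(scores, key=scores.get)
    return best, scores


data = make_data(200, seed=0)
best_k, scores = grid_search(data, grid=[1, 3, 7, 15, 31])
for k, s in scores.items():
    print(f"k={k:2d}  CV MSE={s:.3f}")
print("selected k:", best_k)
```

Very small k tends to overfit (k = 1 reproduces the noise in each training label), while very large k averages over distant points and can underfit. Cross-validation scores each candidate on held-out folds, so the choice does not depend on the final test set.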