Best Hadoop Products

Hadoop is an open-source framework designed for distributed storage and processing of large datasets across clusters of computers. It provides a scalable, reliable, and cost-effective solution for big data processing. Its core components are the Hadoop Distributed File System (HDFS) for storing data across multiple nodes, YARN for managing cluster resources and scheduling jobs, and the MapReduce framework for parallel processing and analysis of the data.

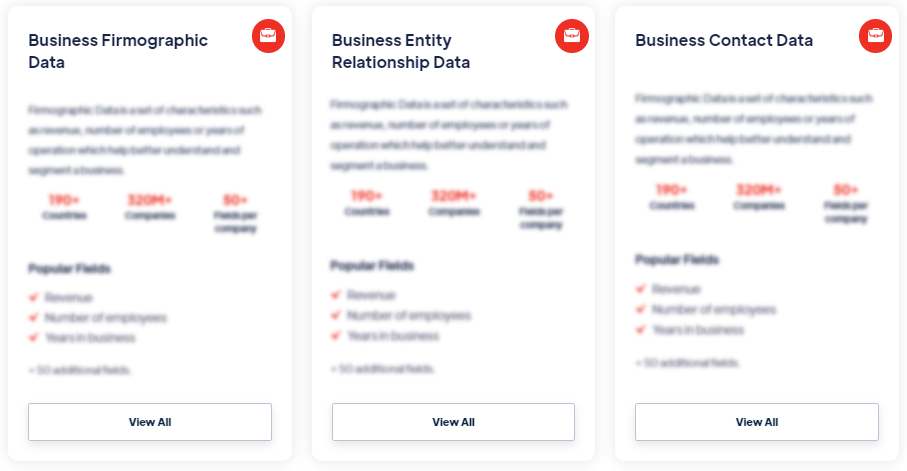

Frequently Asked Questions

1. What is Hadoop?

Hadoop is an open-source framework designed for distributed storage and processing of large datasets across clusters of computers. It provides a scalable, reliable, and cost-effective solution for big data processing. Its core components are the Hadoop Distributed File System (HDFS) for storing data across multiple nodes, YARN for managing cluster resources and scheduling jobs, and the MapReduce framework for parallel processing and analysis of the data.
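
To make the MapReduce model concrete, here is a minimal single-process sketch of its three stages (map, shuffle, reduce) applied to a word count. It is plain Python and does not use any Hadoop API; the function names `map_phase`, `shuffle`, `reduce_phase`, and `word_count` are hypothetical and chosen only for illustration. On a real cluster the map and reduce steps would run in parallel on different nodes.

```python
from collections import defaultdict


def map_phase(line):
    """Emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Group values by key, as the framework does between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Sum the counts collected for one word."""
    return key, sum(values)


def word_count(lines):
    """Run map, shuffle, and reduce in sequence on a list of lines."""
    pairs = [pair for line in lines for pair in map_phase(line)]
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())


if __name__ == "__main__":
    print(word_count(["big data big cluster", "data node"]))
```

Each input line can be mapped independently and each key can be reduced independently, which is the property that lets the work be spread across many machines.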

2. Why is Hadoop important?

Hadoop is important because it allows organizations to store, process, and analyze vast amounts of data efficiently. It enables the processing of data in parallel across multiple nodes, which significantly improves the performance and scalability of data-intensive tasks. Hadoop is widely used for big data analytics, machine learning, data warehousing, and other applications that require handling and analyzing large datasets.

3. What are the key features of Hadoop?

Key features of Hadoop include scalability, fault tolerance, flexibility, cost-effectiveness, and parallel processing. Hadoop scales horizontally: capacity grows by adding more nodes to the cluster. HDFS replicates each data block across several nodes (three copies by default), so data remains reliable and available even when a node fails. Hadoop can process structured, semi-structured, and unstructured data, and it runs on commodity hardware, which makes it a cost-effective solution. Its parallel processing capabilities enable high-speed data processing and analysis.
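
As a rough illustration of what fault tolerance costs in storage, the sketch below estimates how many blocks a file is split into and how much raw disk it occupies once replicated. It assumes the stock HDFS defaults of a 128 MB block size and a replication factor of 3; real clusters may be configured differently, and the function name `hdfs_footprint` is hypothetical.

```python
import math


def hdfs_footprint(file_size_mb, block_size_mb=128, replication=3):
    """Estimate block count and raw storage for one file.

    Defaults mirror the stock HDFS settings (assumed here, not read
    from any cluster configuration).
    """
    blocks = math.ceil(file_size_mb / block_size_mb)
    return {
        "blocks": blocks,
        "block_replicas": blocks * replication,
        # The last block only occupies the bytes it actually holds,
        # so raw usage is the file size times the replication factor.
        "raw_storage_mb": file_size_mb * replication,
    }


if __name__ == "__main__":
    print(hdfs_footprint(1000))
```

With these assumed defaults, a 1000 MB file is split into 8 blocks, stored as 24 block replicas, and consumes about 3000 MB of raw disk.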

4. How is Hadoop used?

Hadoop is used for various applications such as big data analytics, data warehousing, machine learning, log processing, and clickstream analysis. It enables organizations to analyze large volumes of data, store and process structured and unstructured data, train and deploy machine learning models, process and analyze log files, and understand user behavior through clickstream analysis.

5. What are some popular tools and technologies in the Hadoop ecosystem?

The Hadoop ecosystem consists of various tools and technologies that extend the capabilities of Hadoop. Popular examples include Apache Hive for SQL-like querying, Apache Pig for high-level scripting, Apache Spark for in-memory data processing, Apache HBase for distributed database access, and Apache Kafka for distributed streaming.

6. What are the benefits of using Hadoop?

Using Hadoop offers benefits such as scalability, cost-effectiveness, flexibility, fault tolerance, and parallel processing. Hadoop handles massive amounts of data by distributing the workload across multiple nodes. Because it runs on commodity hardware and is open-source software, it keeps infrastructure and licensing costs low. It can process various types of data from diverse sources while keeping that data reliable and available. Its parallel processing capabilities enable faster data processing and analysis.

7. What are the challenges of using Hadoop?

Using Hadoop comes with challenges such as complexity, data management, the programming model, and integration with existing systems. Setting up and managing a Hadoop cluster requires technical expertise. Proper data management, including partitioning, distribution, and replication, is crucial for optimal performance and data reliability. Developing MapReduce programs or using Hadoop-related technologies may require specialized programming skills. Integrating Hadoop with existing systems can be challenging and requires compatibility and data integration considerations.
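
To give a sense of what partitioning involves, here is a small sketch of hash partitioning, the general idea of assigning each record to a partition based on a hash of its key so that records with the same key always land together. It is an illustration only: the CRC32 hash and the function names `partition_for` and `partition_records` are assumptions made for this example, not Hadoop's own implementation.

```python
import zlib


def partition_for(key, num_partitions):
    """Map a string key to a partition index in [0, num_partitions)."""
    # CRC32 is used only because it is stable across runs; it is an
    # illustrative choice, not the hash Hadoop itself uses.
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def partition_records(records, num_partitions):
    """Distribute (key, value) records into per-partition buckets."""
    buckets = [[] for _ in range(num_partitions)]
    for key, value in records:
        buckets[partition_for(key, num_partitions)].append((key, value))
    return buckets


if __name__ == "__main__":
    records = [("user1", "click"), ("user2", "view"), ("user1", "buy")]
    for index, bucket in enumerate(partition_records(records, 3)):
        print(index, bucket)
```

A skewed key distribution would leave some buckets much larger than others, which is one reason partitioning choices affect performance.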