Best Natural Language Processing (NLP) Data

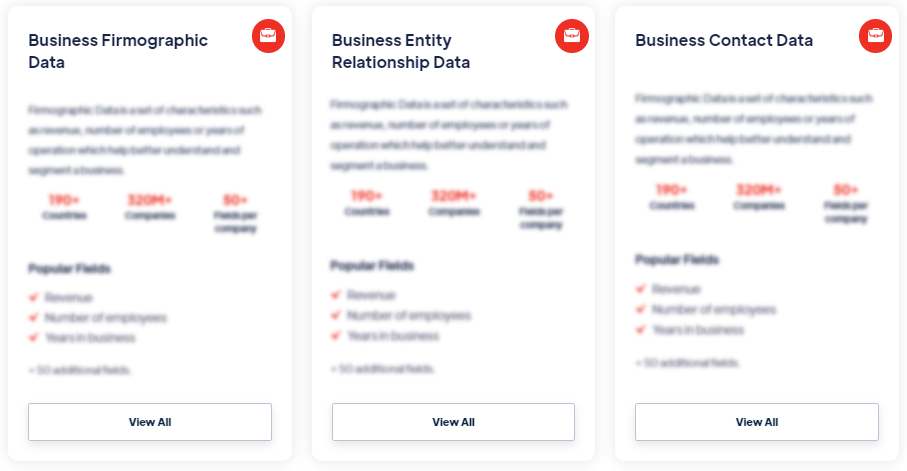


NLP data comprises textual datasets used to train machine learning models for natural language processing. It includes many types of text, such as news articles, books, social media posts, customer reviews, and any other form of written or transcribed spoken language. The data serves as the basis for training models to understand and process human language.


Frequently Asked Questions

1. What is Natural Language Processing (NLP) Data?

NLP data comprises textual datasets used to train machine learning models or algorithms in the field of natural language processing. It includes various types of text, such as news articles, books, social media posts, customer reviews, or any other form of written or spoken language. The data serves as the basis for training models to understand and process human language.

2. How is Natural Language Processing Data collected?

Natural Language Processing data is collected from diverse sources, such as web pages, online platforms, public repositories, books, articles, and other textual content. Data collection methods include web scraping, text mining, crowdsourcing, or obtaining data from pre-existing datasets. The collected data is typically preprocessed to clean and organize it before being used for training NLP models.
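The cleaning step mentioned above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not a description of any particular provider's pipeline. The function names `clean_text` and `deduplicate` and the sample documents are hypothetical, and the regex-based tag stripping is a simplification that a real pipeline would likely replace with a proper HTML parser.

```python
import html
import re

def clean_text(raw):
    """Strip HTML tags, decode entities, and collapse whitespace."""
    # Remove tags first so that decoded entities such as "&lt;" are not
    # mistaken for markup afterwards.
    no_tags = re.sub(r"<[^>]+>", " ", raw)
    decoded = html.unescape(no_tags)
    return re.sub(r"\s+", " ", decoded).strip()

def deduplicate(docs):
    """Drop empty strings and case-insensitive exact duplicates, keeping first-seen order."""
    seen = set()
    unique = []
    for doc in docs:
        key = doc.lower()
        if key and key not in seen:
            seen.add(key)
            unique.append(doc)
    return unique

# Hypothetical scraped snippets, invented for illustration.
raw_docs = [
    "<p>Great phone &amp;   fast delivery!</p>",
    "<div>great phone &amp; fast delivery!</div>",
    "<p>Battery life is &lt; 1 day.</p>",
    "   ",
]

corpus = deduplicate([clean_text(d) for d in raw_docs])
print(corpus)
```

Here the second snippet is dropped as a duplicate of the first and the whitespace-only snippet is dropped as empty, leaving two cleaned documents.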

3. What does Natural Language Processing Data capture?

Natural Language Processing data captures the linguistic features, structures, and patterns present in the text. It includes vocabulary, grammar, syntax, semantics, and contextual information. The data encompasses a wide range of topics, styles, and domains to enable NLP models to understand and interpret language in different contexts.

4. How is Natural Language Processing Data used?

Natural Language Processing data is used to train machine learning models or algorithms for various NLP tasks, such as text classification, sentiment analysis, named entity recognition, machine translation, and question answering. When exposed to a large and diverse dataset, the models learn to recognize linguistic patterns, semantic relationships, and contextual information, which they then apply to language-related tasks.
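To make the training idea concrete, here is a small sketch of sentiment classification with a multinomial Naive Bayes model written from scratch in Python. Naive Bayes is chosen here only because it is compact enough to show in full; the source does not name a specific algorithm. The four training sentences, their labels, and the function names `train` and `predict` are invented for illustration, and a dataset this small says nothing about real-world accuracy.

```python
import math
import re
from collections import Counter, defaultdict

def tokenize(text):
    """Lowercase the text and extract word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def train(examples):
    """Count documents per label and words per label from (text, label) pairs."""
    label_counts = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for text, label in examples:
        label_counts[label] += 1
        for tok in tokenize(text):
            word_counts[label][tok] += 1
            vocab.add(tok)
    return label_counts, word_counts, vocab

def predict(model, text):
    """Return the label with the highest log-probability under Naive Bayes."""
    label_counts, word_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in sorted(label_counts):
        # Log prior: fraction of training documents with this label.
        score = math.log(label_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for tok in tokenize(text):
            if tok not in vocab:
                continue  # ignore words never seen during training
            # Laplace (add-one) smoothing avoids zero probabilities.
            score += math.log(
                (word_counts[label][tok] + 1) / (total_words + len(vocab))
            )
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Hypothetical labeled reviews, invented for illustration.
TRAIN = [
    ("great product, works well", "positive"),
    ("really great value", "positive"),
    ("terrible quality, broke quickly", "negative"),
    ("terrible support and poor value", "negative"),
]

model = train(TRAIN)
print(predict(model, "great product"))
print(predict(model, "terrible quality"))
```

The model only knows words it has seen, which is one reason the size and diversity of the training data matter so much for tasks like this.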

5. What are the challenges with Natural Language Processing Data?

Challenges with Natural Language Processing data include data quality, ambiguity, domain specificity, bias, and privacy concerns. Ensuring the quality and relevance of the data is crucial for training accurate and reliable NLP models. Ambiguities in language, such as homonyms or polysemous words, pose challenges for understanding and disambiguating meaning. Domain-specific language data may be required to tackle context-specific tasks. Addressing bias in the data is essential to avoid biased or discriminatory language processing. Privacy considerations must also be taken into account when working with sensitive textual information.

6. How is Natural Language Processing Data analyzed?

Analysis of Natural Language Processing data involves preprocessing, statistical analysis, linguistic analysis, and machine learning techniques. Preprocessing steps may include tokenization, stemming, part-of-speech tagging, and removing stop words. Statistical and linguistic analysis helps identify patterns, language structures, and linguistic features. Machine learning algorithms are then used to train models on the analyzed data to perform various NLP tasks.
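Several of the preprocessing steps above can be sketched with the Python standard library alone. The example below covers tokenization, stop-word removal, a deliberately crude suffix-stripping stemmer, and a simple token frequency count as a stand-in for statistical analysis. The stop-word list, the `naive_stem` rules, and the sample sentence are all assumptions made for illustration; the stemmer is not the Porter algorithm, and part-of-speech tagging is omitted because it normally relies on a trained model from an NLP library.

```python
import re
from collections import Counter

# Tiny illustrative stop-word list (an assumption; real lists are much longer).
STOP_WORDS = {"a", "an", "and", "are", "in", "is", "of", "on", "the", "to"}

def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())

def remove_stop_words(tokens):
    """Drop tokens that appear in the stop-word list."""
    return [t for t in tokens if t not in STOP_WORDS]

def naive_stem(token):
    """Strip one common English suffix, keeping at least three characters.

    A crude stand-in for a real stemmer, for illustration only.
    """
    for suffix in ("ing", "ed", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token

def preprocess(text):
    """Tokenize, remove stop words, then stem each remaining token."""
    return [naive_stem(t) for t in remove_stop_words(tokenize(text))]

sample = "The models are learning patterns in the reviews."
tokens = preprocess(sample)
counts = Counter(tokens)  # simple frequency statistics over the stems

print(tokens)
print(counts.most_common(2))
```

In practice, established libraries provide better-tested versions of each step, but the order shown here (tokenize, filter, normalize, count) is a common starting point.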

7. How can Natural Language Processing Data improve NLP models?

Natural Language Processing data plays a crucial role in improving the accuracy, robustness, and generalization of NLP models. A diverse and high-quality dataset helps train models to understand language nuances, adapt to different writing styles, and handle various linguistic phenomena. By continually updating and expanding the dataset, NLP models can be refined, enabling them to perform more accurate and contextually relevant language processing tasks.