Best

Natural Language Processing (NLP) Training Data

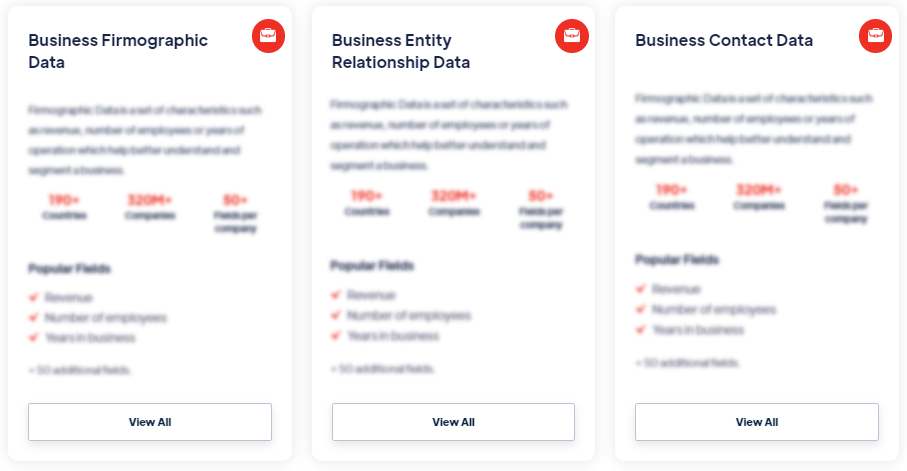

Products

NLP training data consists of text data used to train machine learning models or algorithms in the field of natural language processing. It encompasses a wide range of text sources, such as books, articles, websites, social media posts, customer reviews, or any other form of written or spoken language. The data is essential for teaching models to understand and process human language.

Frequently Asked Questions

1. What is Natural Language Processing (NLP) Training Data?

NLP training data consists of text data used to train machine learning models or algorithms in the field of natural language processing. It encompasses a wide range of text sources, such as books, articles, websites, social media posts, customer reviews, or any other form of written or spoken language. The data is essential for teaching models to understand and process human language.

2. How is Natural Language Processing Training Data collected?

Natural Language Processing training data is collected from various sources, including online platforms, public repositories, text corpora, and domain-specific datasets. Data collection methods may involve web scraping, text mining, data labeling, crowdsourcing, or accessing pre-existing datasets. The collected data is typically preprocessed and annotated to enhance its quality and make it suitable for training NLP models.
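As a rough illustration of the preprocessing that usually follows collection, the sketch below normalizes whitespace and drops empty or duplicate records. The function names and the sample records are hypothetical, chosen only for this example; real pipelines typically add language filtering, near-duplicate detection, and annotation steps.

```python
import re
import unicodedata


def normalize(text: str) -> str:
    """Apply Unicode NFKC normalization and collapse runs of whitespace."""
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip()


def deduplicate(texts):
    """Drop empty strings and case-insensitive exact duplicates, keeping first occurrences."""
    seen = set()
    unique = []
    for raw in texts:
        cleaned = normalize(raw)
        key = cleaned.casefold()
        if cleaned and key not in seen:
            seen.add(key)
            unique.append(cleaned)
    return unique


# Hypothetical raw records, as they might come out of a scraper.
raw_records = [
    "Great  product,\nwould buy again!",
    "great product, would buy again!",
    "   ",
    "Shipping was slow.",
]
corpus = deduplicate(raw_records)
print(corpus)
```

Exact-match deduplication is the simplest possible choice here; it will miss records that differ only in punctuation or minor edits.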

3. What does Natural Language Processing Training Data capture?

Natural Language Processing training data captures the linguistic patterns, semantic relationships, syntax, grammar, and contextual information present in the text. It covers a wide range of topics, styles, and domains to enable NLP models to understand and generate language in diverse contexts. The data aims to reflect the complexity and diversity of human language.

4. How is Natural Language Processing Training Data used?

Natural Language Processing training data is used to train machine learning models or algorithms for NLP tasks such as text classification, sentiment analysis, named entity recognition, machine translation, question answering, and language generation. When exposed to a large and diverse dataset, models learn to recognize patterns, extract information, understand language semantics, and perform specific language-related tasks.
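To make the idea of learning patterns from labeled text concrete, here is a minimal sketch of one of the tasks listed above, sentiment classification, using a bag-of-words Naive Bayes classifier with add-one smoothing written in plain Python. The four training sentences are invented for illustration, and this is only one simple technique among many; it is not meant to represent how production NLP models are trained.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase and split on whitespace (a deliberately naive tokenizer)."""
    return text.lower().split()


def train(examples):
    """Count words per label. `examples` is a list of (text, label) pairs."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    vocab = set()
    for text, label in examples:
        tokens = tokenize(text)
        word_counts[label].update(tokens)
        label_counts[label] += 1
        vocab.update(tokens)
    return word_counts, label_counts, vocab


def predict(model, text):
    """Return the label with the highest log-probability under Naive Bayes."""
    word_counts, label_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in label_counts:
        score = math.log(label_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for token in tokenize(text):
            # Add-one (Laplace) smoothing so unseen words do not zero out the score.
            score += math.log((word_counts[label][token] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Invented toy training data.
train_data = [
    ("great phone love it", "positive"),
    ("excellent battery great screen", "positive"),
    ("terrible phone hate it", "negative"),
    ("awful battery poor screen", "negative"),
]
model = train(train_data)
print(predict(model, "great screen"))
print(predict(model, "terrible battery"))
```

With so few examples the classifier only reflects word counts in the toy data, which is exactly why larger and more diverse training sets matter.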

5. What are the challenges with Natural Language Processing Training Data?

Challenges with Natural Language Processing training data include data quality, bias, domain specificity, and scalability. Ensuring high-quality and representative data is crucial for training accurate and unbiased NLP models. Addressing biases present in the data, such as gender or cultural biases, is important to avoid perpetuating biases in NLP applications. Obtaining domain-specific data may be necessary to ensure models perform well in specific industries or specialized domains.
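One simple, partial check on representativeness is to look at how labels are distributed in a dataset. The sketch below computes label shares and a majority-to-minority ratio; the helper names and the sample labels are hypothetical. Note that this only surfaces class imbalance. It does not detect gender or cultural bias in the text itself, which requires dedicated auditing.

```python
from collections import Counter


def label_distribution(labels):
    """Return the share of each label as a fraction of all examples."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}


def imbalance_ratio(labels):
    """Return the ratio of the most frequent label count to the least frequent one."""
    counts = Counter(labels)
    return max(counts.values()) / min(counts.values())


# Invented labels for a skewed sentiment dataset.
labels = ["positive"] * 8 + ["negative"] * 2
distribution = label_distribution(labels)
ratio = imbalance_ratio(labels)
print(distribution, ratio)
```

A high ratio suggests a model may see too few examples of the minority class, which could be addressed by collecting more data or resampling.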

6. How is Natural Language Processing Training Data analyzed?

Analysis of Natural Language Processing training data involves preprocessing, exploratory data analysis, statistical analysis, linguistic analysis, and machine learning techniques. Preprocessing steps may include text cleaning, tokenization, lemmatization, and removing stop words. Exploratory and statistical analysis can help identify data characteristics, distributional properties, and correlations. Linguistic analysis focuses on understanding language structures and features. Machine learning algorithms are used to train models on the analyzed data.
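The preprocessing steps named above can be sketched with the Python standard library alone. This example covers text cleaning, tokenization, and stop-word removal, then computes token frequencies as a very basic form of exploratory analysis. The stop-word list and sample documents are illustrative assumptions, and lemmatization is left out because it needs a lexical resource such as those shipped with NLTK or spaCy.

```python
import re
from collections import Counter

# A tiny illustrative stop-word list; real lists are much longer.
STOP_WORDS = {"a", "an", "and", "the", "is", "are", "of", "to", "in", "it", "was"}


def clean(text):
    """Strip HTML-like tags, lowercase, and replace other punctuation with spaces."""
    text = re.sub(r"<[^>]+>", " ", text)
    return re.sub(r"[^a-z0-9\s]", " ", text.lower())


def tokenize(text):
    """Split cleaned text on whitespace."""
    return text.split()


def preprocess(text):
    """Clean, tokenize, and drop stop words."""
    return [token for token in tokenize(clean(text)) if token not in STOP_WORDS]


# Invented sample documents.
docs = [
    "<p>The battery is great!</p>",
    "Battery life was poor, and the screen is dim.",
]
tokens = [preprocess(doc) for doc in docs]

# Token frequencies across the corpus: a first look at its distribution.
freq = Counter(token for doc in tokens for token in doc)
print(tokens)
print(freq.most_common(3))
```

Whitespace tokenization is a simplification; languages without space-delimited words, or text with contractions and hyphenation, generally need a dedicated tokenizer.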

7. How can Natural Language Processing Training Data improve NLP models?

Natural Language Processing training data plays a vital role in improving the accuracy, performance, and generalization capabilities of NLP models. High-quality and diverse datasets help models learn language patterns, semantic relationships, context, and nuances present in different text sources. By continuously updating and expanding the training data, NLP models can adapt to new language trends, improve their understanding of various domains, and deliver more accurate and effective language processing results.