Overfitting

Products

Overfitting occurs when a machine learning model becomes too complex and captures noise or random fluctuations in the training data, rather than the underlying patterns and relationships. As a result, the model may fail to generalize well to new data. Read more

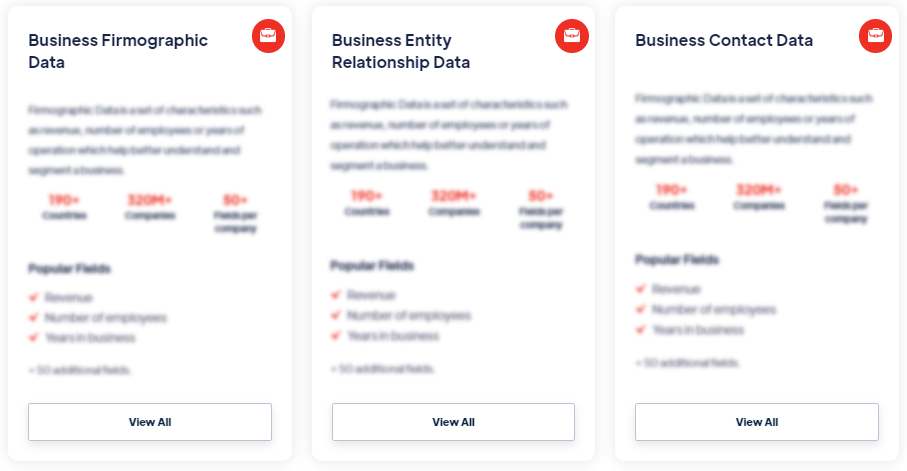

Our Data Integrations

Request Data Sample for

Overfitting

Browse the Data Marketplace

Frequently Asked Questions

1. What is overfitting?

Overfitting occurs when a machine learning model becomes too complex and captures noise or random fluctuations in the training data rather than the underlying patterns and relationships. As a result, the model may perform well on the data it was trained on but fail to generalize to new, unseen data.
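One common way to make this precise is shown below. The notation is introduced here and is not taken from this page: f is the trained model, ℓ is a per-example loss, (x_i, y_i) for i = 1..n are the training examples, and D is the (unknown) distribution that new data comes from. A model is overfitting when its training loss is small but the gap between the expected loss on new data and the training loss is large.

```latex
\hat{R}_{\text{train}}(f) = \frac{1}{n} \sum_{i=1}^{n} \ell\bigl(f(x_i), y_i\bigr),
\qquad
R(f) = \mathbb{E}_{(x, y) \sim \mathcal{D}}\bigl[\ell\bigl(f(x), y\bigr)\bigr],
\qquad
\operatorname{gap}(f) = R(f) - \hat{R}_{\text{train}}(f)
```

R(f) cannot be computed directly because D is unknown; in practice it is estimated with data held out from training.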

2. Why is overfitting a problem?

Overfitting is a problem because it compromises the performance and reliability of a machine learning model. The model may achieve excellent accuracy on the training data but perform poorly on new, unseen data, which means it generalizes poorly and has limited practical use.

3. What causes overfitting?

Overfitting can be caused by several factors, including an excessively complex model with too many parameters relative to the available training data, noisy or irrelevant features in the data, and insufficient regularization to control the model's complexity.
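The "too many parameters relative to the available training data" case can be seen in its extreme form with a minimal, standard-library Python sketch that fits a polynomial with one coefficient per training point using Lagrange interpolation. The dataset (a straight line y = 2x plus Gaussian noise) and the helper name `lagrange_fit` are hypothetical choices for illustration. Because the polynomial passes through every point, its training error is zero, noise included.

```python
import random

def lagrange_fit(xs, ys):
    """Return the unique polynomial of degree len(xs) - 1 through every
    (x, y) pair: as many free parameters as there are data points."""
    def predict(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            basis = 1.0
            for j, xj in enumerate(xs):
                if j != i:
                    basis *= (x - xj) / (xi - xj)
            total += yi * basis
        return total
    return predict

rng = random.Random(0)
# Hypothetical data: 10 points on the line y = 2x with Gaussian noise added.
xs = [i / 9 for i in range(10)]
ys = [2 * x + rng.gauss(0, 0.2) for x in xs]

model = lagrange_fit(xs, ys)
train_residuals = [model(x) - y for x, y in zip(xs, ys)]
print("max training residual:", max(abs(r) for r in train_residuals))

# Between the training points the curve is free to wander away from y = 2x.
for x in (0.05, 0.5, 0.95):
    print(f"x={x:.2f}  model={model(x):+.3f}  true line={2 * x:+.3f}")
```

Nothing in this procedure separates signal from noise, so the fitted curve reproduces the noise exactly; comparing the model's values with the true line between training points shows how far it can drift.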

4. What are the consequences of overfitting?

The consequences of overfitting include reduced performance on new data, high sensitivity to noise in the training data (small changes to the training set can produce a noticeably different model), and a higher likelihood of incorrect predictions or unreliable estimates. Overfitting can therefore lead to poor decisions and undermine the usefulness of the model in real-world applications.

5. How can overfitting be detected?

Overfitting can be detected by evaluating the model's performance on a separate validation or test dataset that was not used during training. If the model performs significantly worse on the validation or test data than on the training data, it may indicate overfitting.
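A minimal sketch of this hold-out check in standard-library Python follows. The data (a noisy line y = 3x + 1), the two models and the helper names (`holdout_split`, `fit_line`, `fit_memorizer`) are hypothetical choices for illustration: a least-squares line is a simple model, while a 1-nearest-neighbour "memorizer" reproduces every training label exactly. The memorizer shows zero training error and a much larger validation error, the pattern described above, while the line's two errors stay close to each other.

```python
import random

def holdout_split(xs, ys, val_fraction, seed):
    """Shuffle indices reproducibly and hold out a fraction for validation."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    n_val = int(len(idx) * val_fraction)
    val, train = idx[:n_val], idx[n_val:]
    return ([xs[i] for i in train], [ys[i] for i in train],
            [xs[i] for i in val], [ys[i] for i in val])

def mse(model, xs, ys):
    """Mean squared error of model over the given points."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def fit_line(xs, ys):
    """Ordinary least squares for y = a*x + b: a deliberately simple model."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = my - a * mx
    return lambda x: a * x + b

def fit_memorizer(xs, ys):
    """1-nearest-neighbour regression: returns the label of the closest
    training x, so every training label (noise included) is reproduced."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]

rng = random.Random(42)
xs = [rng.uniform(0, 10) for _ in range(1000)]
ys = [3 * x + 1 + rng.gauss(0, 2) for x in xs]
x_tr, y_tr, x_val, y_val = holdout_split(xs, ys, val_fraction=0.3, seed=7)

results = {}
for name, fit in (("line", fit_line), ("memorizer", fit_memorizer)):
    model = fit(x_tr, y_tr)
    train_err, val_err = mse(model, x_tr, y_tr), mse(model, x_val, y_val)
    results[name] = (train_err, val_err)
    print(f"{name:<9} train MSE={train_err:6.2f}  validation MSE={val_err:6.2f}")
```

What counts as "significantly worse" is a judgment call that depends on the problem; comparing the train/validation gap across candidate models, as here, is often more informative than a fixed threshold.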

6. How can overfitting be prevented or mitigated?

Several techniques can be used to prevent or mitigate overfitting: collecting more training data so that it better represents the underlying patterns; simplifying the model structure or reducing the number of parameters; applying regularization techniques such as L1 or L2 regularization, which add a penalty on the size of the model's parameters to the training objective; using cross-validation to assess model performance and tune model complexity; and employing ensemble methods, such as bagging, that combine multiple models to reduce variance.
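Cross-validation, one of the techniques above, can also be used to choose how complex a model should be. The sketch below, again standard-library Python with hypothetical data (a noisy sine wave) and hypothetical helper names (`knn_predict`, `cross_val_mse`), runs 5-fold cross-validation for k-nearest-neighbour regression. A small k gives a very flexible model (k = 1 memorizes the training data) and a larger k gives a smoother one; the sketch keeps the k with the lowest average validation error.

```python
import math
import random

def knn_predict(train, x, k):
    """Average the labels of the k training points closest to x."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / len(nearest)

def cross_val_mse(data, k, n_folds=5, seed=0):
    """Average validation MSE of k-NN regression over n_folds folds."""
    shuffled = data[:]
    random.Random(seed).shuffle(shuffled)
    folds = [shuffled[i::n_folds] for i in range(n_folds)]
    fold_errors = []
    for i, val in enumerate(folds):
        # Train on every fold except the i-th, validate on the i-th.
        train = [p for j, fold in enumerate(folds) if j != i for p in fold]
        sq_err = sum((knn_predict(train, x, k) - y) ** 2 for x, y in val)
        fold_errors.append(sq_err / len(val))
    return sum(fold_errors) / n_folds

rng = random.Random(1)
data = []
for _ in range(150):
    x = rng.uniform(0, 2 * math.pi)
    data.append((x, math.sin(x) + rng.gauss(0, 0.3)))

candidates = [1, 3, 5, 9, 15, 25]
scores = {k: cross_val_mse(data, k) for k in candidates}
best_k = min(scores, key=scores.get)
for k in candidates:
    print(f"k={k:<3} cross-validated MSE={scores[k]:.4f}")
print("selected k:", best_k)
```

Because every point is used for validation exactly once, the averaged score is less sensitive to one lucky or unlucky split than a single hold-out set.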

7. What are the trade-offs in addressing overfitting?

Addressing overfitting involves balancing model complexity against generalization performance. Simplifying the model or applying stronger regularization can reduce overfitting, usually at the cost of somewhat worse performance on the training data; pushed too far, it causes underfitting, where the model is too simple to capture the underlying patterns. Striking the right balance lets the model capture the underlying patterns without fitting noise or irrelevant details.
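The trade-off can be seen by sweeping the strength of L2 (ridge) regularization. The sketch below fits a deliberately flexible degree-8 polynomial to 30 noisy samples of sin(3x) by solving the ridge normal equations (X^T X + λI) w = X^T y directly. The degree, sample sizes, λ values and helper names (`fit_ridge`, `solve`) are hypothetical, and for simplicity the intercept is penalized too, which many libraries avoid. As λ grows, the training error can only stay the same or rise and the weight norm can only stay the same or shrink (both follow from the ridge objective), while the validation error typically falls at first and then rises again once the model starts to underfit.

```python
import math
import random

DEGREE = 8  # hypothetical: flexible enough to overfit 30 training points

def features(x):
    """Polynomial features 1, x, x^2, ..., x^DEGREE."""
    return [x ** d for d in range(DEGREE + 1)]

def solve(a, b):
    """Solve a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w

def fit_ridge(xs, ys, lam):
    """Minimise sum of squared errors + lam * ||w||^2 by solving
    (X^T X + lam * I) w = X^T y (the intercept is penalised too)."""
    rows = [features(x) for x in xs]
    p = DEGREE + 1
    xtx = [[sum(r[i] * r[j] for r in rows) + (lam if i == j else 0.0)
            for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(p)]
    return solve(xtx, xty)

def mse(w, xs, ys):
    """Mean squared error of the polynomial with weights w."""
    preds = (sum(wi * fi for wi, fi in zip(w, features(x))) for x in xs)
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(xs)

rng = random.Random(3)

def sample(n):
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    ys = [math.sin(3 * x) + rng.gauss(0, 0.2) for x in xs]
    return xs, ys

x_tr, y_tr = sample(30)
x_val, y_val = sample(200)

lambdas = [1e-3, 1e-2, 1e-1, 1.0, 10.0]
train_errors, val_errors, norms = [], [], []
for lam in lambdas:
    w = fit_ridge(x_tr, y_tr, lam)
    train_errors.append(mse(w, x_tr, y_tr))
    val_errors.append(mse(w, x_val, y_val))
    norms.append(math.sqrt(sum(wi * wi for wi in w)))
    print(f"lambda={lam:<6g} train MSE={train_errors[-1]:.4f}  "
          f"validation MSE={val_errors[-1]:.4f}  ||w||={norms[-1]:.2f}")
```

In practice λ would be chosen on validation data (for example with cross-validation) rather than by looking at the training error, which always favours the smallest λ.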