Best Speech Synthesis Data

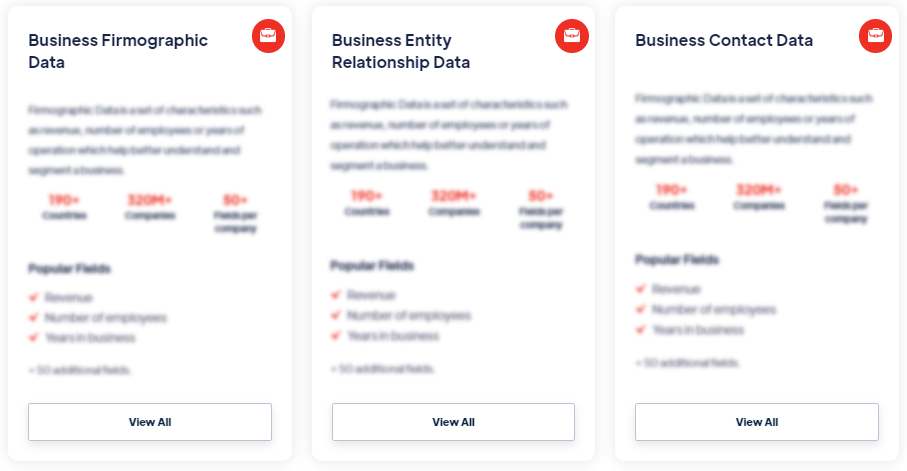

Speech synthesis data typically includes pairs of text and corresponding audio recordings. The text can range from simple sentences to longer paragraphs or even entire books. The audio recordings consist of human voices pronouncing the text samples. The dataset may also include additional metadata, such as the speaker's identity or linguistic annotations.

Frequently Asked Questions

1. What Does Speech Synthesis Data Include?

Speech synthesis data typically includes pairs of text and corresponding audio recordings. The text can range from simple sentences to longer paragraphs or even entire books. The audio recordings consist of human voices pronouncing the text samples. The dataset may also include additional metadata, such as the speaker's identity or linguistic annotations.
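To make the structure of such a dataset concrete, here is a minimal Python sketch of one text/audio pair with optional metadata, plus a parser for a simple manifest file. The pipe-delimited layout (`clip_id|text|speaker_id`), the `wavs/` audio directory, and the `SpeechSample` and `parse_manifest` names are hypothetical choices for illustration; real corpora use a variety of manifest formats.

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Optional


@dataclass
class SpeechSample:
    """One text/audio pair plus optional metadata (hypothetical schema)."""
    clip_id: str
    text: str
    audio_path: str
    speaker_id: Optional[str] = None
    # Free-form linguistic annotations, e.g. phoneme strings or emotion labels.
    annotations: dict = field(default_factory=dict)


def parse_manifest(lines: Iterable[str], audio_dir: str = "wavs") -> List[SpeechSample]:
    """Parse hypothetical 'clip_id|text|speaker_id' lines; speaker_id is optional.

    Assumes the transcript itself never contains a '|' character.
    """
    samples = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # ignore blank lines
        parts = line.split("|")
        if len(parts) < 2:
            raise ValueError(f"malformed manifest line: {line!r}")
        clip_id, text = parts[0], parts[1]
        speaker = parts[2] if len(parts) > 2 and parts[2] else None
        samples.append(SpeechSample(
            clip_id=clip_id,
            text=text,
            audio_path=f"{audio_dir}/{clip_id}.wav",  # assumed file layout
            speaker_id=speaker,
        ))
    return samples


manifest = [
    "clip_0001|The quick brown fox jumps over the lazy dog.|spk_a",
    "clip_0002|Speech synthesis turns text into audio.",
]
for s in parse_manifest(manifest):
    print(s.clip_id, s.speaker_id, s.audio_path)
```

Keeping the speaker field optional lets the same schema describe both single-speaker and multi-speaker collections.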

2. Where Can Speech Synthesis Data Be Found?

Speech synthesis data can be obtained from various sources. Some common sources include open-source text-to-speech (TTS) projects, research institutions, companies specializing in speech synthesis technology, and crowdsourcing platforms where individuals contribute their voice recordings for synthesis training.

3. How Can Speech Synthesis Data Be Utilized?

Speech synthesis data is used to train machine learning models, particularly neural-network-based models, for text-to-speech synthesis. The text and audio pairs are used to teach the model how to generate natural-sounding speech from written text. The models learn the relationships between linguistic features in the text and corresponding acoustic patterns in the audio.
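As a rough illustration of how the text side of each pair can be prepared for a neural model, the sketch below maps characters to integer IDs and pads a batch to a common length. It assumes a character-level vocabulary with ID 0 reserved for padding; many systems use phonemes instead, and the audio side (often converted to spectrogram frames) is not shown. The `PAD` symbol and the function names are hypothetical.

```python
from typing import Dict, List, Tuple

PAD = "_"  # hypothetical padding symbol, always mapped to ID 0


def build_vocab(texts: List[str]) -> Dict[str, int]:
    """Assign an integer ID to every lowercase character in the corpus."""
    chars = sorted({ch for t in texts for ch in t.lower()} - {PAD})
    return {PAD: 0, **{ch: i + 1 for i, ch in enumerate(chars)}}


def encode(text: str, vocab: Dict[str, int]) -> List[int]:
    """Convert text to IDs, silently dropping characters not in the vocabulary."""
    return [vocab[ch] for ch in text.lower() if ch in vocab]


def pad_batch(seqs: List[List[int]], pad_id: int = 0) -> Tuple[List[List[int]], List[int]]:
    """Right-pad sequences to the longest one and return the true lengths."""
    max_len = max(len(s) for s in seqs)
    padded = [s + [pad_id] * (max_len - len(s)) for s in seqs]
    return padded, [len(s) for s in seqs]


texts = ["Hello world.", "Hi!"]
vocab = build_vocab(texts)
batch, lengths = pad_batch([encode(t, vocab) for t in texts])
print(lengths)  # [12, 3]
```

The true lengths are returned alongside the padded batch so that padded positions can be masked out when the model relates text positions to audio frames.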

4. What Are the Benefits of Speech Synthesis Data?

Speech synthesis data enables the development of high-quality and natural-sounding speech synthesis systems. Models trained on diverse text and audio samples can generate speech that sounds human-like, with appropriate intonation, pronunciation, and prosody. This technology has applications in various domains, including assistive technology, accessibility, voice assistants, multimedia, and more.

5. What Are the Challenges of Speech Synthesis Data?

Obtaining high-quality and diverse speech synthesis data can be challenging. The dataset needs to cover a wide range of linguistic and acoustic variations, including different languages, accents, speaking styles, and emotional expressions. Collecting and annotating such data at scale can be time-consuming and resource-intensive. Additionally, ensuring data privacy and addressing potential biases in the dataset are important considerations.
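One practical way to check the coverage described above is to total the recorded duration per accent, speaking style, or other attribute and flag under-represented values. This sketch assumes each sample is a dict with a `duration_s` field and categorical fields such as `accent` or `style`; the field names, the sample data, and the 10% threshold are hypothetical, and a duration count says nothing about recording quality or annotation accuracy.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def coverage_report(
    samples: List[dict], key: str, min_share: float = 0.1
) -> Dict[str, Tuple[float, bool]]:
    """Share of total audio duration per value of `key`, plus an under-represented flag.

    Samples missing `key` are grouped under "unknown". The 10% default
    threshold is an arbitrary illustrative choice.
    """
    totals: Dict[str, float] = defaultdict(float)
    for s in samples:
        totals[s.get(key, "unknown")] += s["duration_s"]
    grand_total = sum(totals.values())
    report = {}
    for value, seconds in sorted(totals.items()):
        share = seconds / grand_total if grand_total else 0.0
        report[value] = (round(share, 3), share < min_share)
    return report


# Hypothetical metadata for four recordings.
samples = [
    {"accent": "us", "style": "neutral", "duration_s": 40.0},
    {"accent": "us", "style": "excited", "duration_s": 5.0},
    {"accent": "uk", "style": "neutral", "duration_s": 50.0},
    {"accent": "in", "style": "neutral", "duration_s": 5.0},
]
print(coverage_report(samples, "accent"))
print(coverage_report(samples, "style"))
```

With these numbers, the `in` accent and the `excited` style each account for 5% of the audio and are flagged. Weighting by duration rather than by clip count reflects how much training audio each category actually contributes; counting clips would treat a 1-second clip and a 20-second clip the same.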

6. How Can Speech Synthesis Data Impact Technology and Applications?

High-quality speech synthesis data contributes to the development of more natural and expressive speech synthesis systems. These systems can enhance applications such as voice assistants, audiobook narration, interactive voice response (IVR) systems, multimedia content creation, and more. They also have the potential to improve accessibility for individuals with speech impairments or reading difficulties.

7. What Are the Emerging Trends in Speech Synthesis Data?

Emerging trends in speech synthesis data include the development of multilingual and cross-lingual datasets to support speech synthesis in different languages. There is also growing interest in generating expressive and emotionally rich speech, enabling TTS systems to convey different moods, attitudes, or speaking styles. Additionally, there are ongoing efforts to reduce data requirements and improve transfer learning techniques to enable more efficient and personalized speech synthesis.