
Validation Set

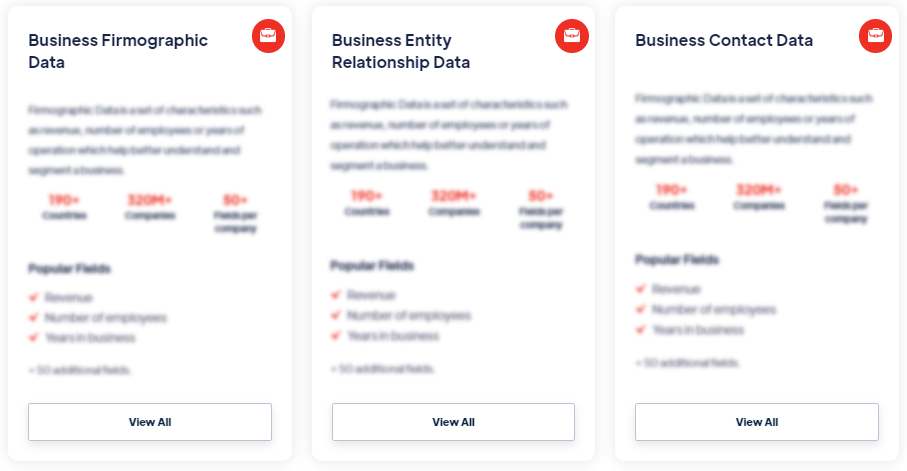


A validation set is a subset of the available data that is used to evaluate the performance and tune the hyperparameters of a machine learning model during training. It helps estimate how well the model will generalize to new, unseen data.


Frequently Asked Questions

1. What is a validation set?

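A validation set is a subset of the available data that is used to evaluate the performance and tune the hyperparameters of a machine learning model during training. It helps estimate how well the model will generalize to new, unseen data, although that estimate becomes optimistic once it has been used to make tuning decisions, which is why a separate test set is kept for the final evaluation.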

2. What is the purpose of a validation set?

The main purpose of a validation set is to estimate the performance of a model on data it was not trained on. That estimate is used to assess how well the model generalizes and to choose between candidate models or hyperparameter settings.

3. How is a validation set created?

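A validation set is created by partitioning the available data, usually after shuffling it at random, into three disjoint sets: training, validation, and test. The validation set is usually a smaller portion of the data (common splits include 70/15/15 and 80/10/10) and is kept separate from the training and test sets, so that no example appears in more than one of them.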
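A minimal sketch of a random three-way split in plain Python, assuming the data fit in memory and a 70/15/15 split is acceptable. The function name `train_val_test_split` and its parameters are illustrative, not taken from any particular library. It shuffles once with a fixed seed and then slices, so the three sets are disjoint by construction.

```python
import random

def train_val_test_split(data, val_frac=0.15, test_frac=0.15, seed=0):
    """Shuffle a copy of `data` and slice it into disjoint train/validation/test lists."""
    if val_frac < 0 or test_frac < 0 or val_frac + test_frac >= 1:
        raise ValueError("fractions must be non-negative and sum to less than 1")
    items = list(data)
    random.Random(seed).shuffle(items)  # fixed seed keeps the split reproducible
    n_test = round(len(items) * test_frac)
    n_val = round(len(items) * val_frac)
    test = items[:n_test]
    val = items[n_test:n_test + n_val]
    train = items[n_test + n_val:]  # everything left over goes to training
    return train, val, test

train, val, test = train_val_test_split(range(100))
print(len(train), len(val), len(test))  # 70 15 15
```

For imbalanced classification data, a stratified variant (shuffling and slicing each class separately) keeps the label proportions similar across the three sets.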

4. How is a validation set used in hyperparameter tuning?

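The validation set is used to compare different combinations of hyperparameters. Each candidate is trained on the training set and then scored on the validation set, and the combination with the best validation score is selected. The test set plays no part in this choice, so it can still provide an unbiased final estimate.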
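The selection loop can be sketched as follows, assuming a deliberately small toy setting: 1-D points with binary labels, a hand-written k-nearest-neighbors classifier, and the number of neighbors `k` as the only hyperparameter. All names here (`knn_predict`, `tune_k`, `make_data`) and the synthetic data are hypothetical. Every candidate uses only the training data to make predictions and is compared by its validation accuracy.

```python
import random

def knn_predict(train, x, k):
    """Majority label among the k training points nearest to x (1-D features, labels 0/1)."""
    nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    ones = sum(label for _, label in nearest)
    return 1 if 2 * ones > k else 0

def accuracy(train, data, k):
    """Fraction of (x, y) pairs in `data` that the k-NN model built on `train` gets right."""
    return sum(knn_predict(train, x, k) == y for x, y in data) / len(data)

def tune_k(train, val, candidates):
    """Score each candidate k on the validation set; return the best k and all scores."""
    scores = {k: accuracy(train, val, k) for k in candidates}
    best_k = max(candidates, key=lambda k: scores[k])  # first candidate wins ties
    return best_k, scores

def make_data(n, rng, noise=0.2):
    """Synthetic data: label is 1 when x > 0.5, flipped with probability `noise`."""
    data = []
    for _ in range(n):
        x = rng.random()
        y = int(x > 0.5)
        if rng.random() < noise:
            y = 1 - y
        data.append((x, y))
    return data

rng = random.Random(42)
train, val = make_data(200, rng), make_data(100, rng)
best_k, scores = tune_k(train, val, [1, 3, 5, 9, 15, 31])
print("validation accuracy per k:", scores)
print("selected k:", best_k)
```

Odd values of `k` avoid tied votes between the two labels. Once `k` is fixed, the model would be evaluated once on the untouched test set.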

5. How does the size of the validation set affect the model?

The validation set should be large enough to give a reliable estimate of the model's performance, because scores measured on only a few examples are noisy, but small enough to leave sufficient data for training. It is typically much smaller than the training set.
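One way to see this trade-off is the standard error of an accuracy measured on n validation examples. Treating each prediction as an independent Bernoulli trial that is correct with probability p gives SE = sqrt(p(1 - p) / n), so quadrupling the validation set only halves the uncertainty. The sketch below assumes a true accuracy of 0.9, chosen purely for illustration, and uses the normal approximation (1.96 standard errors) for a 95% interval.

```python
import math

def accuracy_standard_error(p, n):
    """Standard error of an accuracy measured on n independent validation examples,
    treating each prediction as a Bernoulli trial that is correct with probability p."""
    return math.sqrt(p * (1 - p) / n)

# Hypothetical true accuracy of 0.9; 1.96 standard errors gives an approximate 95% interval
for n in (100, 1_000, 10_000):
    half_width = 1.96 * accuracy_standard_error(0.9, n)
    print(f"n = {n:>6}: 0.900 +/- {half_width:.3f}")
```

With p = 0.9 the half-width is about 0.059 at n = 100, 0.019 at n = 1,000, and 0.006 at n = 10,000, so a difference of one or two percentage points between models is hard to trust on a small validation set.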

6. How does a validation set help prevent overfitting?

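Evaluating the model on a separate validation set reveals whether it is overfitting the training data. A typical sign is a training loss that keeps decreasing while the validation loss stops improving or starts to rise. Adjustments can then be made to the model or its hyperparameters, such as stopping training early, adding regularization, or simplifying the model, to reduce overfitting and improve generalization.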
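Early stopping is one common way to act on this signal. The class below is a minimal, framework-independent sketch; the name `EarlyStopping` and its `patience` and `min_delta` parameters are illustrative, although common training frameworks offer similar callbacks. It remembers the best validation loss seen so far and signals a stop after `patience` epochs without improvement. The loss values are made up to mimic a model that starts to overfit.

```python
class EarlyStopping:
    """Stop training once validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience          # epochs to wait after the last improvement
        self.min_delta = min_delta        # smallest decrease that counts as an improvement
        self.best_loss = float("inf")
        self.best_epoch = -1
        self.epochs_without_improvement = 0

    def step(self, epoch, val_loss):
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience

# Made-up validation losses: they fall at first, then rise as the model starts to overfit
val_losses = [0.95, 0.70, 0.55, 0.52, 0.54, 0.57, 0.61, 0.66]

stopper = EarlyStopping(patience=3)
for epoch, val_loss in enumerate(val_losses):
    if stopper.step(epoch, val_loss):
        break
print(f"stopped at epoch {epoch}; best loss {stopper.best_loss} at epoch {stopper.best_epoch}")
# stopped at epoch 6; best loss 0.52 at epoch 3
```

In a real training loop the model would also be saved whenever `best_epoch` changes, so the weights from that epoch can be restored after stopping.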

7. What evaluation metrics are commonly used with a validation set?

The right metric depends on the type of problem, the nature of the data, and the goals of the model. Common choices for classification include accuracy, precision, recall, F1-score, and area under the ROC curve (AUC-ROC); for regression, mean squared error (MSE) is a common choice.
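The classification metrics listed here are all derived from counts of true and false positives and negatives, while MSE is an average of squared errors. The functions below are a plain-Python sketch for binary labels (1 = positive class), written for clarity rather than speed; in practice a library such as scikit-learn provides tested implementations. The AUC function uses the pairwise interpretation (the probability that a randomly chosen positive scores higher than a randomly chosen negative, with ties counted as one half), which is quadratic in the number of examples and only suited to small sets. The sample labels and scores are invented for illustration.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0   # guard against no positive predictions
    recall = tp / (tp + fn) if tp + fn else 0.0      # guard against no positive labels
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

def mean_squared_error(y_true, y_pred):
    """Average squared difference between targets and predictions (regression)."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def roc_auc(y_true, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count 1/2."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

y_true = [1, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 0]
y_score = [0.9, 0.3, 0.4, 0.8, 0.6, 0.1]
print(classification_metrics(y_true, y_pred))     # each value is about 0.667 here
print(roc_auc(y_true, y_score))                    # 8/9, about 0.889
print(mean_squared_error([2.0, 4.0], [1.0, 5.0]))  # 1.0
```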