Best Web Scraping Products

Web scraping is the automated process of extracting data from websites. It involves writing code or using scraping tools to retrieve information from web pages and save it in a structured format, such as a spreadsheet or a database.

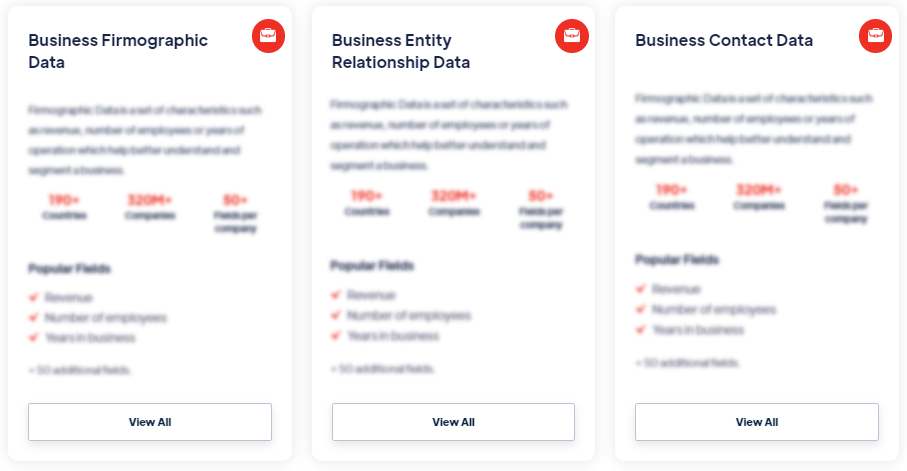

Frequently Asked Questions

1. What is web scraping?

Web scraping is the automated process of extracting data from websites. It involves writing code or using scraping tools to retrieve information from web pages and save it in a structured format, such as a spreadsheet or a database.

2. How is web scraping done?

Web scraping can be done using various programming languages, libraries, and tools. Commonly used tools include Python libraries like BeautifulSoup and Scrapy, as well as browser extensions and software applications specifically designed for web scraping. These tools allow developers to navigate through web pages, extract relevant data, and save it for further analysis.
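
To make the "navigate, extract, save" flow concrete, here is a minimal sketch of the extraction step. It deliberately uses Python's standard-library `html.parser` as a stand-in for BeautifulSoup or Scrapy so that it runs without installing anything. The `LinkExtractor` class, the `extract_links` helper, and the sample HTML are hypothetical names and data chosen for illustration.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects the page title and every (href, text) pair from <a> tags."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.links = []            # list of (href, link text) tuples
        self._in_title = False
        self._current_href = None  # href of the <a> tag being read, if any
        self._current_text = []

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:               # ignore anchors without an href
                self._current_href = href
                self._current_text = []

    def handle_data(self, data):
        if self._in_title:
            self.title += data
        elif self._current_href is not None:
            self._current_text.append(data)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False
        elif tag == "a" and self._current_href is not None:
            text = "".join(self._current_text).strip()
            self.links.append((self._current_href, text))
            self._current_href = None


# Hypothetical page content; a real scraper would download this first.
SAMPLE_HTML = """
<html><head><title>Example Catalog</title></head>
<body>
  <a href="/products/1">Widget</a>
  <a href="/products/2">Gadget <b>Pro</b></a>
  <a>no href here</a>
</body></html>
"""


def extract_links(html):
    """Parse an HTML string and return (title, links)."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.title, parser.links


title, links = extract_links(SAMPLE_HTML)
print(title)
for href, text in links:
    print(href, text)
```

A dedicated library such as BeautifulSoup would likely express the same extraction in fewer lines; the event-driven parser is used here only to keep the sketch dependency-free.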

3. What can be scraped from websites?

Almost any information available on a website can be scraped, including text, images, links, tables, product details, reviews, contact information, and more. However, it's important to respect website terms of service and applicable legal regulations when scraping data.
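
As an illustration of one item from that list, the sketch below pulls an HTML table into rows and writes them out as CSV, a structured format that a spreadsheet can open. It relies only on the standard library; `TableExtractor`, `rows_to_csv`, and the sample table are hypothetical and assume a simple table without nested tables or merged cells.

```python
import csv
import io
from html.parser import HTMLParser


class TableExtractor(HTMLParser):
    """Collects the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row being read, or None
        self._cell = None  # text fragments of the cell being read, or None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


def rows_to_csv(rows):
    """Serialize rows to CSV text; csv.writer quotes cells containing commas."""
    buffer = io.StringIO(newline="")
    csv.writer(buffer, lineterminator="\n").writerows(rows)
    return buffer.getvalue()


# Hypothetical product table for illustration.
SAMPLE_TABLE = """
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Widget</td><td>9.99</td></tr>
  <tr><td>Gadget, large</td><td>24.50</td></tr>
</table>
"""

extractor = TableExtractor()
extractor.feed(SAMPLE_TABLE)
extractor.close()
rows = extractor.rows
csv_text = rows_to_csv(rows)
print(csv_text)
```

Writing through `csv.writer` rather than joining cells with commas matters here because scraped text often contains commas or quotes of its own.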

4. Is web scraping legal?

The legality of web scraping varies depending on factors such as the website's terms of service, copyright laws, and applicable regulations in your jurisdiction. Scraping publicly accessible data is generally considered legal, but you should still review the website's terms of service and follow any specific guidelines or restrictions it provides.

5. What are the challenges of web scraping?

Web scraping can present challenges due to variations in website structures, dynamic content, anti-scraping measures, CAPTCHAs, rate limiting, and changing HTML layouts. Developers typically address these with techniques such as managing cookies, routing requests through proxies, or rendering and parsing JavaScript-generated content.
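
Rate limiting in particular is often handled by retrying with increasing pauses. The following is a minimal sketch of exponential backoff, a technique not named in the answer above but commonly paired with it. It assumes the caller supplies a `fetch` callable returning a `(status, body)` pair; `fetch_with_retries`, `backoff_delays`, and the set of retryable status codes are hypothetical choices, and the network call is faked so the example runs offline.

```python
import time

# Assumption: treat "429 Too Many Requests" and "503 Service Unavailable"
# as signals to slow down and try again.
RETRYABLE = {429, 503}


def backoff_delays(base_delay, max_attempts):
    """Pauses before each retry: base, 2*base, 4*base, ..."""
    return [base_delay * (2 ** i) for i in range(max_attempts - 1)]


def fetch_with_retries(fetch, url, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url) until it returns a non-retryable status or attempts run out."""
    delays = backoff_delays(base_delay, max_attempts)
    for attempt in range(max_attempts):
        status, body = fetch(url)
        if status not in RETRYABLE:
            return status, body
        if attempt < len(delays):      # no pause after the final attempt
            sleep(delays[attempt])
    return status, body


# Offline demonstration: a fake fetcher that is rate limited twice, then succeeds.
responses = iter([(429, ""), (503, ""), (200, "<html>ok</html>")])
waited = []  # records the pauses instead of actually sleeping

status, body = fetch_with_retries(
    lambda url: next(responses),
    "https://example.com/products",  # placeholder URL
    base_delay=0.5,
    sleep=waited.append,
)
print(status, waited)
```

Passing `sleep` as a parameter keeps the retry logic testable without real delays; in production the default `time.sleep` would be used.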

6. How is web scraping used?

Web scraping is used for various purposes, including market research, data collection for analysis, competitive intelligence, lead generation, sentiment analysis, content aggregation, price comparison, monitoring online presence, and more. It enables businesses to gather valuable data from the web and gain insights that support informed decision-making.

7. What are the best practices for web scraping?

When engaging in web scraping, it's important to adhere to ethical and legal guidelines. Some best practices include respecting website terms of service, avoiding excessive requests that could impact website performance, using appropriate user-agent headers, handling data responsibly, and being mindful of privacy considerations.
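
Two of these practices, an identifying user-agent header and restraint in request volume, can be sketched with Python's standard library. The example also consults a `robots.txt` policy, which the list above does not mention; it is included as an assumption about one common way sites publish crawling preferences, not as a substitute for reading the terms of service. The user-agent string, the `robots.txt` content, and the `build_policy` and `plan_request` helpers are hypothetical, and no request is actually sent.

```python
import urllib.request
import urllib.robotparser

# Hypothetical identifier; a real one should name the bot and a contact point.
USER_AGENT = "example-research-bot/0.1 (contact: ops@example.com)"

# Hypothetical robots.txt content, inlined so the sketch runs offline.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_policy(robots_text):
    """Parse robots.txt text into a RobotFileParser without touching the network."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


def plan_request(policy, url, default_delay=1.0):
    """Return (request, delay_seconds), or (None, None) if the URL is disallowed."""
    if not policy.can_fetch(USER_AGENT, url):
        return None, None
    delay = policy.crawl_delay(USER_AGENT)
    request = urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
    return request, (delay if delay is not None else default_delay)


policy = build_policy(ROBOTS_TXT)
allowed_request, delay = plan_request(policy, "https://example.com/products/1")
blocked_request, _ = plan_request(policy, "https://example.com/private/data")

print(allowed_request.full_url, delay)
print(blocked_request)
```

A caller would wait `delay` seconds between consecutive requests to the same site and skip any URL for which `plan_request` returns `None`.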